we provide High quality Google Professional-Data-Engineer practice exam which are the best for clearing Professional-Data-Engineer test, and to get certified by Google Google Professional Data Engineer Exam. The Professional-Data-Engineer Questions & Answers covers all the knowledge points of the real Professional-Data-Engineer exam. Crack your Google Professional-Data-Engineer Exam with latest dumps, guaranteed!

Online Google Professional-Data-Engineer free dumps demo Below:

NEW QUESTION 1

Your financial services company is moving to cloud technology and wants to store 50 TB of financial timeseries data in the cloud. This data is updated frequently and new data will be streaming in all the time. Your company also wants to move their existing Apache Hadoop jobs to the cloud to get insights into this data.

Which product should they use to store the data?

- A. Cloud Bigtable

- B. Google BigQuery

- C. Google Cloud Storage

- D. Google Cloud Datastore

Answer: A

Explanation:

Reference: https://cloud.google.com/bigtable/docs/schema-design-time-series

NEW QUESTION 2

You create an important report for your large team in Google Data Studio 360. The report uses Google BigQuery as its data source. You notice that visualizations are not showing data that is less than 1 hour old. What should you do?

- A. Disable caching by editing the report settings.

- B. Disable caching in BigQuery by editing table details.

- C. Refresh your browser tab showing the visualizations.

- D. Clear your browser history for the past hour then reload the tab showing the virtualizations.

Answer: A

Explanation:

Reference https://support.google.com/datastudio/answer/7020039?hl=en

NEW QUESTION 3

Which of the following statements about the Wide & Deep Learning model are true? (Select 2 answers.)

- A. The wide model is used for memorization, while the deep model is used for generalization.

- B. A good use for the wide and deep model is a recommender system.

- C. The wide model is used for generalization, while the deep model is used for memorization.

- D. A good use for the wide and deep model is a small-scale linear regression problem.

Answer: AB

Explanation:

Can we teach computers to learn like humans do, by combining the power of memorization and generalization? It's not an easy question to answer, but by jointly training a wide linear model (for memorization) alongside a deep neural network (for generalization), one can combine the strengths of both to bring us one step closer. At Google, we call it Wide & Deep Learning. It's useful for generic large-scale regression and classification problems with sparse inputs (categorical features with a large number of possible feature values), such as recommender systems, search, and ranking problems.

Reference: https://research.googleblog.com/2016/06/wide-deep-learning-better-together-with.html

NEW QUESTION 4

You have a data stored in BigQuery. The data in the BigQuery dataset must be highly available. You need to define a storage, backup, and recovery strategy of this data that minimizes cost. How should you configure the BigQuery table?

- A. Set the BigQuery dataset to be regiona

- B. In the event of an emergency, use a point-in-time snapshot to recover the data.

- C. Set the BigQuery dataset to be regiona

- D. Create a scheduled query to make copies of the data to tables suffixed with the time of the backu

- E. In the event of an emergency, use the backup copy of the table.

- F. Set the BigQuery dataset to be multi-regiona

- G. In the event of an emergency, use a point-in-time snapshot to recover the data.

- H. Set the BigQuery dataset to be multi-regiona

- I. Create a scheduled query to make copies of the data to tables suffixed with the time of the backu

- J. In the event of an emergency, use the backup copy of the table.

Answer: B

NEW QUESTION 5

Which role must be assigned to a service account used by the virtual machines in a Dataproc cluster so they can execute jobs?

- A. Dataproc Worker

- B. Dataproc Viewer

- C. Dataproc Runner

- D. Dataproc Editor

Answer: A

Explanation:

Service accounts used with Cloud Dataproc must have Dataproc/Dataproc Worker role (or have all the permissions granted by Dataproc Worker role).

Reference: https://cloud.google.com/dataproc/docs/concepts/service-accounts#important_notes

NEW QUESTION 6

Which of these is NOT a way to customize the software on Dataproc cluster instances?

- A. Set initialization actions

- B. Modify configuration files using cluster properties

- C. Configure the cluster using Cloud Deployment Manager

- D. Log into the master node and make changes from there

Answer: C

Explanation:

You can access the master node of the cluster by clicking the SSH button next to it in the Cloud Console.

You can easily use the --properties option of the dataproc command in the Google Cloud SDK to modify many common configuration files when creating a cluster.

When creating a Cloud Dataproc cluster, you can specify initialization actions in executables and/or scripts that Cloud Dataproc will run on all nodes in your Cloud Dataproc cluster immediately after the cluster is set up. [https://cloud.google.com/dataproc/docs/concepts/configuring-clusters/init-actions]

Reference: https://cloud.google.com/dataproc/docs/concepts/configuring-clusters/cluster-properties

NEW QUESTION 7

Suppose you have a table that includes a nested column called "city" inside a column called "person", but when you try to submit the following query in BigQuery, it gives you an error.

SELECT person FROM `project1.example.table1` WHERE city = "London"

How would you correct the error?

- A. Add ", UNNEST(person)" before the WHERE clause.

- B. Change "person" to "person.city".

- C. Change "person" to "city.person".

- D. Add ", UNNEST(city)" before the WHERE clause.

Answer: A

Explanation:

To access the person.city column, you need to "UNNEST(person)" and JOIN it to table1 using a comma. Reference:

https://cloud.google.com/bigquery/docs/reference/standard-sql/migrating-from-legacy-sql#nested_repeated_resu

NEW QUESTION 8

What are the minimum permissions needed for a service account used with Google Dataproc?

- A. Execute to Google Cloud Storage; write to Google Cloud Logging

- B. Write to Google Cloud Storage; read to Google Cloud Logging

- C. Execute to Google Cloud Storage; execute to Google Cloud Logging

- D. Read and write to Google Cloud Storage; write to Google Cloud Logging

Answer: D

Explanation:

Service accounts authenticate applications running on your virtual machine instances to other Google Cloud Platform services. For example, if you write an application that reads and writes files on Google Cloud Storage, it must first authenticate to the Google Cloud Storage API. At a minimum, service accounts used with Cloud Dataproc need permissions to read and write to Google Cloud Storage, and to write to Google Cloud Logging.

Reference: https://cloud.google.com/dataproc/docs/concepts/service-accounts#important_notes

NEW QUESTION 9

Your globally distributed auction application allows users to bid on items. Occasionally, users place identical bids at nearly identical times, and different application servers process those bids. Each bid event contains the item, amount, user, and timestamp. You want to collate those bid events into a single location in real time to determine which user bid first. What should you do?

- A. Create a file on a shared file and have the application servers write all bid events to that fil

- B. Process the file with Apache Hadoop to identify which user bid first.

- C. Have each application server write the bid events to Cloud Pub/Sub as they occu

- D. Push the events from Cloud Pub/Sub to a custom endpoint that writes the bid event information into Cloud SQL.

- E. Set up a MySQL database for each application server to write bid events int

- F. Periodically query each of those distributed MySQL databases and update a master MySQL database with bid event information.

- G. Have each application server write the bid events to Google Cloud Pub/Sub as they occu

- H. Use a pull subscription to pull the bid events using Google Cloud Dataflo

- I. Give the bid for each item to the userIn the bid event that is processed first.

Answer: C

NEW QUESTION 10

You are planning to use Google's Dataflow SDK to analyze customer data such as displayed below. Your project requirement is to extract only the customer name from the data source and then write to an output PCollection.

Tom,555 X street Tim,553 Y street Sam, 111 Z street

Which operation is best suited for the above data processing requirement?

- A. ParDo

- B. Sink API

- C. Source API

- D. Data extraction

Answer: A

Explanation:

In Google Cloud dataflow SDK, you can use the ParDo to extract only a customer name of each element in your PCollection.

Reference: https://cloud.google.com/dataflow/model/par-do

NEW QUESTION 11

You have a requirement to insert minute-resolution data from 50,000 sensors into a BigQuery table. You expect significant growth in data volume and need the data to be available within 1 minute of ingestion for real-time analysis of aggregated trends. What should you do?

- A. Use bq load to load a batch of sensor data every 60 seconds.

- B. Use a Cloud Dataflow pipeline to stream data into the BigQuery table.

- C. Use the INSERT statement to insert a batch of data every 60 seconds.

- D. Use the MERGE statement to apply updates in batch every 60 seconds.

Answer: C

NEW QUESTION 12

You operate a database that stores stock trades and an application that retrieves average stock price for a given company over an adjustable window of time. The data is stored in Cloud Bigtable where the datetime of the stock trade is the beginning of the row key. Your application has thousands of concurrent users, and you notice that performance is starting to degrade as more stocks are added. What should you do to improve the performance of your application?

- A. Change the row key syntax in your Cloud Bigtable table to begin with the stock symbol.

- B. Change the row key syntax in your Cloud Bigtable table to begin with a random number per second.

- C. Change the data pipeline to use BigQuery for storing stock trades, and update your application.

- D. Use Cloud Dataflow to write summary of each day’s stock trades to an Avro file on Cloud Storage.Update your application to read from Cloud Storage and Cloud Bigtable to compute the responses.

Answer: A

NEW QUESTION 13

You designed a database for patient records as a pilot project to cover a few hundred patients in three clinics. Your design used a single database table to represent all patients and their visits, and you used self-joins to generate reports. The server resource utilization was at 50%. Since then, the scope of the project has expanded. The database must now store 100 times more patient records. You can no longer run the reports, because they either take too long or they encounter errors with insufficient compute resources. How should you adjust the database design?

- A. Add capacity (memory and disk space) to the database server by the order of 200.

- B. Shard the tables into smaller ones based on date ranges, and only generate reports with prespecified date ranges.

- C. Normalize the master patient-record table into the patient table and the visits table, and create othernecessary tables to avoid self-join.

- D. Partition the table into smaller tables, with one for each clini

- E. Run queries against the smaller table pairs, and use unions for consolidated reports.

Answer: B

NEW QUESTION 14

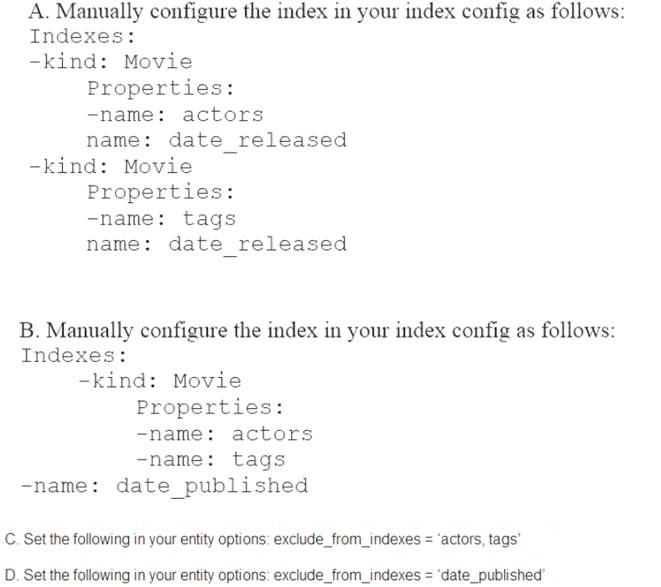

You are deploying a new storage system for your mobile application, which is a media streaming service. You decide the best fit is Google Cloud Datastore. You have entities with multiple properties, some of which can take on multiple values. For example, in the entity ‘Movie’ the property ‘actors’ and the property ‘tags’ have multiple values but the property ‘date released’ does not. A typical query would ask for all movies with actor=<actorname> ordered by date_released or all movies with tag=Comedy ordered by date_released. How should you avoid a combinatorial explosion in the number of indexes?

- A. Option A

- B. Option B.

- C. Option C

- D. Option D

Answer: A

NEW QUESTION 15

You are working on a sensitive project involving private user data. You have set up a project on Google Cloud Platform to house your work internally. An external consultant is going to assist with coding a complex transformation in a Google Cloud Dataflow pipeline for your project. How should you maintain users’ privacy?

- A. Grant the consultant the Viewer role on the project.

- B. Grant the consultant the Cloud Dataflow Developer role on the project.

- C. Create a service account and allow the consultant to log on with it.

- D. Create an anonymized sample of the data for the consultant to work with in a different project.

Answer: C

NEW QUESTION 16

What is the recommended action to do in order to switch between SSD and HDD storage for your Google Cloud Bigtable instance?

- A. create a third instance and sync the data from the two storage types via batch jobs

- B. export the data from the existing instance and import the data into a new instance

- C. run parallel instances where one is HDD and the other is SDD

- D. the selection is final and you must resume using the same storage type

Answer: B

Explanation:

When you create a Cloud Bigtable instance and cluster, your choice of SSD or HDD storage for the cluster is permanent. You cannot use the Google Cloud Platform Console to change the type of storage that is used for the cluster.

If you need to convert an existing HDD cluster to SSD, or vice-versa, you can export the data from the existing instance and import the data into a new instance. Alternatively, you can write

a Cloud Dataflow or Hadoop MapReduce job that copies the data from one instance to another. Reference: https://cloud.google.com/bigtable/docs/choosing-ssd-hdd–

NEW QUESTION 17

You are migrating your data warehouse to BigQuery. You have migrated all of your data into tables in a dataset. Multiple users from your organization will be using the data. They should only see certain tables based on their team membership. How should you set user permissions?

- A. Assign the users/groups data viewer access at the table level for each table

- B. Create SQL views for each team in the same dataset in which the data resides, and assign the users/groups data viewer access to the SQL views

- C. Create authorized views for each team in the same dataset in which the data resides, and assign theusers/groups data viewer access to the authorized views

- D. Create authorized views for each team in datasets created for each tea

- E. Assign the authorized views data viewer access to the dataset in which the data reside

- F. Assign the users/groups data viewer access to the datasets in which the authorized views reside

Answer: C

NEW QUESTION 18

You are integrating one of your internal IT applications and Google BigQuery, so users can query BigQuery from the application’s interface. You do not want individual users to authenticate to BigQuery and you do not want to give them access to the dataset. You need to securely access BigQuery from your IT application.

What should you do?

- A. Create groups for your users and give those groups access to the dataset

- B. Integrate with a single sign-on (SSO) platform, and pass each user’s credentials along with the query request

- C. Create a service account and grant dataset access to that accoun

- D. Use the service account’s private key to access the dataset

- E. Create a dummy user and grant dataset access to that use

- F. Store the username and password for that user in a file on the files system, and use those credentials to access the BigQuery dataset

Answer: C

NEW QUESTION 19

If you're running a performance test that depends upon Cloud Bigtable, all the choices except one below are recommended steps. Which is NOT a recommended step to follow?

- A. Do not use a production instance.

- B. Run your test for at least 10 minutes.

- C. Before you test, run a heavy pre-test for several minutes.

- D. Use at least 300 GB of data.

Answer: A

Explanation:

If you're running a performance test that depends upon Cloud Bigtable, be sure to follow these steps as you

plan and execute your test:

Use a production instance. A development instance will not give you an accurate sense of how a production instance performs under load.

Use at least 300 GB of data. Cloud Bigtable performs best with 1 TB or more of data. However, 300 GB of data is enough to provide reasonable results in a performance test on a 3-node cluster. On larger clusters, use 100 GB of data per node.

Before you test, run a heavy pre-test for several minutes. This step gives Cloud Bigtable a chance to balance data across your nodes based on the access patterns it observes.

Run your test for at least 10 minutes. This step lets Cloud Bigtable further optimize your data, and it helps ensure that you will test reads from disk as well as cached reads from memory.

Reference: https://cloud.google.com/bigtable/docs/performance

NEW QUESTION 20

You work for a car manufacturer and have set up a data pipeline using Google Cloud Pub/Sub to capture anomalous sensor events. You are using a push subscription in Cloud Pub/Sub that calls a custom HTTPS endpoint that you have created to take action of these anomalous events as they occur. Your custom HTTPS endpoint keeps getting an inordinate amount of duplicate messages. What is the most likely cause of these duplicate messages?

- A. The message body for the sensor event is too large.

- B. Your custom endpoint has an out-of-date SSL certificate.

- C. The Cloud Pub/Sub topic has too many messages published to it.

- D. Your custom endpoint is not acknowledging messages within the acknowledgement deadline.

Answer: B

NEW QUESTION 21

Your company produces 20,000 files every hour. Each data file is formatted as a comma separated values (CSV) file that is less than 4 KB. All files must be ingested on Google Cloud Platform before they can be processed. Your company site has a 200 ms latency to Google Cloud, and your Internet connection bandwidth is limited as 50 Mbps. You currently deploy a secure FTP (SFTP) server on a virtual machine in Google Compute Engine as the data ingestion point. A local SFTP client runs on a dedicated machine to transmit the CSV files as is. The goal is to make reports with data from the previous day available to the executives by 10:00 a.m. each day. This design is barely able to keep up with the current volume, even though the bandwidth utilization is rather low.

You are told that due to seasonality, your company expects the number of files to double for the next three months. Which two actions should you take? (choose two.)

- A. Introduce data compression for each file to increase the rate file of file transfer.

- B. Contact your internet service provider (ISP) to increase your maximum bandwidth to at least 100 Mbps.

- C. Redesign the data ingestion process to use gsutil tool to send the CSV files to a storage bucket in parallel.

- D. Assemble 1,000 files into a tape archive (TAR) fil

- E. Transmit the TAR files instead, and disassemble the CSV files in the cloud upon receiving them.

- F. Create an S3-compatible storage endpoint in your network, and use Google Cloud Storage Transfer Service to transfer on-premices data to the designated storage bucket.

Answer: CE

NEW QUESTION 22

......

Thanks for reading the newest Professional-Data-Engineer exam dumps! We recommend you to try the PREMIUM Certleader Professional-Data-Engineer dumps in VCE and PDF here: https://www.certleader.com/Professional-Data-Engineer-dumps.html (239 Q&As Dumps)