Cause all that matters here is passing the Google Professional-Data-Engineer exam. Cause all that you need is a high score of Professional-Data-Engineer Google Professional Data Engineer Exam exam. The only one thing you need to do is downloading Ucertify Professional-Data-Engineer exam study guides now. We will not let you down with our money-back guarantee.

Free demo questions for Google Professional-Data-Engineer Exam Dumps Below:

NEW QUESTION 1

What are two of the benefits of using denormalized data structures in BigQuery?

- A. Reduces the amount of data processed, reduces the amount of storage required

- B. Increases query speed, makes queries simpler

- C. Reduces the amount of storage required, increases query speed

- D. Reduces the amount of data processed, increases query speed

Answer: B

Explanation:

Denormalization increases query speed for tables with billions of rows because BigQuery's performance degrades when doing JOINs on large tables, but with a denormalized data

structure, you don't have to use JOINs, since all of the data has been combined into one table. Denormalization also makes queries simpler because you do not have to use JOIN clauses.

Denormalization increases the amount of data processed and the amount of storage required because it creates redundant data.

Reference:

https://cloud.google.com/solutions/bigquery-data-warehouse#denormalizing_data

NEW QUESTION 2

You are building a data pipeline on Google Cloud. You need to prepare data using a casual method for a machine-learning process. You want to support a logistic regression model. You also need to monitor and adjust for null values, which must remain real-valued and cannot be removed. What should you do?

- A. Use Cloud Dataprep to find null values in sample source dat

- B. Convert all nulls to ‘none’ using a Cloud Dataproc job.

- C. Use Cloud Dataprep to find null values in sample source dat

- D. Convert all nulls to 0 using a Cloud Dataprep job.

- E. Use Cloud Dataflow to find null values in sample source dat

- F. Convert all nulls to ‘none’ using a Cloud Dataprep job.

- G. Use Cloud Dataflow to find null values in sample source dat

- H. Convert all nulls to using a custom script.

Answer: C

NEW QUESTION 3

You are managing a Cloud Dataproc cluster. You need to make a job run faster while minimizing costs, without losing work in progress on your clusters. What should you do?

- A. Increase the cluster size with more non-preemptible workers.

- B. Increase the cluster size with preemptible worker nodes, and configure them to forcefully decommission.

- C. Increase the cluster size with preemptible worker nodes, and use Cloud Stackdriver to trigger a script to preserve work.

- D. Increase the cluster size with preemptible worker nodes, and configure them to use graceful decommissioning.

Answer: D

Explanation:

Reference https://cloud.google.com/dataproc/docs/concepts/configuring-clusters/flex

NEW QUESTION 4

You want to analyze hundreds of thousands of social media posts daily at the lowest cost and with the fewest steps.

You have the following requirements: You will batch-load the posts once per day and run them through the Cloud Natural Language API.

You will batch-load the posts once per day and run them through the Cloud Natural Language API.  You will extract topics and sentiment from the posts.

You will extract topics and sentiment from the posts. You must store the raw posts for archiving and reprocessing.

You must store the raw posts for archiving and reprocessing. You will create dashboards to be shared with people both inside and outside your organization.

You will create dashboards to be shared with people both inside and outside your organization.

You need to store both the data extracted from the API to perform analysis as well as the raw social media posts for historical archiving. What should you do?

- A. Store the social media posts and the data extracted from the API in BigQuery.

- B. Store the social media posts and the data extracted from the API in Cloud SQL.

- C. Store the raw social media posts in Cloud Storage, and write the data extracted from the API into BigQuery.

- D. Feed to social media posts into the API directly from the source, and write the extracted data from the API into BigQuery.

Answer: D

NEW QUESTION 5

You have a data pipeline that writes data to Cloud Bigtable using well-designed row keys. You want to monitor your pipeline to determine when to increase the size of you Cloud Bigtable cluster. Which two actions can you take to accomplish this? Choose 2 answers.

- A. Review Key Visualizer metric

- B. Increase the size of the Cloud Bigtable cluster when the Read pressure index is above 100.

- C. Review Key Visualizer metric

- D. Increase the size of the Cloud Bigtable cluster when the Write pressure index is above 100.

- E. Monitor the latency of write operation

- F. Increase the size of the Cloud Bigtable cluster when there is a sustained increase in write latency.

- G. Monitor storage utilizatio

- H. Increase the size of the Cloud Bigtable cluster when utilization increases above 70% of max capacity.

- I. Monitor latency of read operation

- J. Increase the size of the Cloud Bigtable cluster of read operations take longer than 100 ms.

Answer: AC

NEW QUESTION 6

You are a retailer that wants to integrate your online sales capabilities with different in-home assistants, such as Google Home. You need to interpret customer voice commands and issue an order to the backend systems. Which solutions should you choose?

- A. Cloud Speech-to-Text API

- B. Cloud Natural Language API

- C. Dialogflow Enterprise Edition

- D. Cloud AutoML Natural Language

Answer: D

NEW QUESTION 7

Business owners at your company have given you a database of bank transactions. Each row contains the user ID, transaction type, transaction location, and transaction amount. They ask you to investigate what type of machine learning can be applied to the data. Which three machine learning applications can you use? (Choose three.)

- A. Supervised learning to determine which transactions are most likely to be fraudulent.

- B. Unsupervised learning to determine which transactions are most likely to be fraudulent.

- C. Clustering to divide the transactions into N categories based on feature similarity.

- D. Supervised learning to predict the location of a transaction.

- E. Reinforcement learning to predict the location of a transaction.

- F. Unsupervised learning to predict the location of a transaction.

Answer: BCE

NEW QUESTION 8

You are implementing security best practices on your data pipeline. Currently, you are manually executing jobs as the Project Owner. You want to automate these jobs by taking nightly batch files containing non-public information from Google Cloud Storage, processing them with a Spark Scala job on a Google Cloud Dataproc cluster, and depositing the results into Google BigQuery.

How should you securely run this workload?

- A. Restrict the Google Cloud Storage bucket so only you can see the files

- B. Grant the Project Owner role to a service account, and run the job with it

- C. Use a service account with the ability to read the batch files and to write to BigQuery

- D. Use a user account with the Project Viewer role on the Cloud Dataproc cluster to read the batch files and write to BigQuery

Answer: B

NEW QUESTION 9

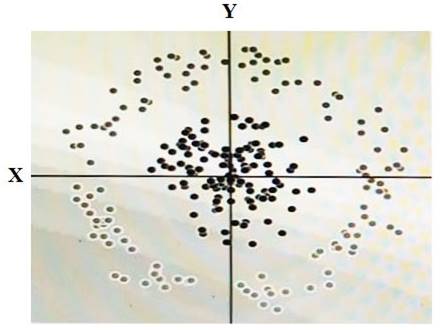

You have some data, which is shown in the graphic below. The two dimensions are X and Y, and the shade of each dot represents what class it is. You want to classify this data accurately using a linear algorithm.

To do this you need to add a synthetic feature. What should the value of that feature be?

- A. X^2+Y^2

- B. X^2

- C. Y^2

- D. cos(X)

Answer: D

NEW QUESTION 10

Your team is working on a binary classification problem. You have trained a support vector machine (SVM) classifier with default parameters, and received an area under the Curve (AUC) of 0.87 on the validation set. You want to increase the AUC of the model. What should you do?

- A. Perform hyperparameter tuning

- B. Train a classifier with deep neural networks, because neural networks would always beat SVMs

- C. Deploy the model and measure the real-world AUC; it’s always higher because of generalization

- D. Scale predictions you get out of the model (tune a scaling factor as a hyperparameter) in order to get the highest AUC

Answer: D

NEW QUESTION 11

You are designing a basket abandonment system for an ecommerce company. The system will send a message to a user based on these rules: No interaction by the user on the site for 1 hour

No interaction by the user on the site for 1 hour Has added more than $30 worth of products to the basket

Has added more than $30 worth of products to the basket  Has not completed a transaction

Has not completed a transaction

You use Google Cloud Dataflow to process the data and decide if a message should be sent. How should you design the pipeline?

- A. Use a fixed-time window with a duration of 60 minutes.

- B. Use a sliding time window with a duration of 60 minutes.

- C. Use a session window with a gap time duration of 60 minutes.

- D. Use a global window with a time based trigger with a delay of 60 minutes.

Answer: D

NEW QUESTION 12

To run a TensorFlow training job on your own computer using Cloud Machine Learning Engine, what would your command start with?

- A. gcloud ml-engine local train

- B. gcloud ml-engine jobs submit training

- C. gcloud ml-engine jobs submit training local

- D. You can't run a TensorFlow program on your own computer using Cloud ML Engine .

Answer: A

Explanation:

gcloud ml-engine local train - run a Cloud ML Engine training job locally

This command runs the specified module in an environment similar to that of a live Cloud ML Engine Training Job.

This is especially useful in the case of testing distributed models, as it allows you to validate that you are

properly interacting with the Cloud ML Engine cluster configuration. Reference: https://cloud.google.com/sdk/gcloud/reference/ml-engine/local/train

NEW QUESTION 13

Your company is selecting a system to centralize data ingestion and delivery. You are considering messaging and data integration systems to address the requirements. The key requirements are: The ability to seek to a particular offset in a topic, possibly back to the start of all data ever captured

The ability to seek to a particular offset in a topic, possibly back to the start of all data ever captured  Support for publish/subscribe semantics on hundreds of topics

Support for publish/subscribe semantics on hundreds of topics Retain per-key ordering Which system should you choose?

Retain per-key ordering Which system should you choose?

- A. Apache Kafka

- B. Cloud Storage

- C. Cloud Pub/Sub

- D. Firebase Cloud Messaging

Answer: A

NEW QUESTION 14

You are a head of BI at a large enterprise company with multiple business units that each have different priorities and budgets. You use on-demand pricing for BigQuery with a quota of 2K concurrent on-demand slots per project. Users at your organization sometimes don’t get slots to execute their query and you need to correct this. You’d like to avoid introducing new projects to your account.

What should you do?

- A. Convert your batch BQ queries into interactive BQ queries.

- B. Create an additional project to overcome the 2K on-demand per-project quota.

- C. Switch to flat-rate pricing and establish a hierarchical priority model for your projects.

- D. Increase the amount of concurrent slots per project at the Quotas page at the Cloud Console.

Answer: C

Explanation:

Reference https://cloud.google.com/blog/products/gcp/busting-12-myths-about-bigquery

NEW QUESTION 15

Cloud Dataproc charges you only for what you really use with billing.

- A. month-by-month

- B. minute-by-minute

- C. week-by-week

- D. hour-by-hour

Answer: B

Explanation:

One of the advantages of Cloud Dataproc is its low cost. Dataproc charges for what you really use with minute-by-minute billing and a low, ten-minute-minimum billing period.

Reference: https://cloud.google.com/dataproc/docs/concepts/overview

NEW QUESTION 16

Each analytics team in your organization is running BigQuery jobs in their own projects. You want to enable each team to monitor slot usage within their projects. What should you do?

- A. Create a Stackdriver Monitoring dashboard based on the BigQuery metric query/scanned_bytes

- B. Create a Stackdriver Monitoring dashboard based on the BigQuery metric slots/allocated_for_project

- C. Create a log export for each project, capture the BigQuery job execution logs, create a custom metric based on the totalSlotMs, and create a Stackdriver Monitoring dashboard based on the custom metric

- D. Create an aggregated log export at the organization level, capture the BigQuery job execution logs, create a custom metric based on the totalSlotMs, and create a Stackdriver Monitoring dashboard based on the custom metric

Answer: D

NEW QUESTION 17

Your company is running their first dynamic campaign, serving different offers by analyzing real-time data during the holiday season. The data scientists are collecting terabytes of data that rapidly grows every hour during their 30-day campaign. They are using Google Cloud Dataflow to preprocess the data and collect the feature (signals) data that is needed for the machine learning model in Google Cloud Bigtable. The team is observing suboptimal performance with reads and writes of their initial load of 10 TB of data. They want to improve this performance while minimizing cost. What should they do?

- A. Redefine the schema by evenly distributing reads and writes across the row space of the table.

- B. The performance issue should be resolved over time as the site of the BigDate cluster is increased.

- C. Redesign the schema to use a single row key to identify values that need to be updated frequently in the cluster.

- D. Redesign the schema to use row keys based on numeric IDs that increase sequentially per user viewing the offers.

Answer: A

NEW QUESTION 18

You have several Spark jobs that run on a Cloud Dataproc cluster on a schedule. Some of the jobs run in sequence, and some of the jobs run concurrently. You need to automate this process. What should you do?

- A. Create a Cloud Dataproc Workflow Template

- B. Create an initialization action to execute the jobs

- C. Create a Directed Acyclic Graph in Cloud Composer

- D. Create a Bash script that uses the Cloud SDK to create a cluster, execute jobs, and then tear down the cluster

Answer: A

NEW QUESTION 19

You are planning to migrate your current on-premises Apache Hadoop deployment to the cloud. You need to ensure that the deployment is as fault-tolerant and cost-effective as possible for long-running batch jobs. You want to use a managed service. What should you do?

- A. Deploy a Cloud Dataproc cluste

- B. Use a standard persistent disk and 50% preemptible worker

- C. Store data in Cloud Storage, and change references in scripts from hdfs:// to gs://

- D. Deploy a Cloud Dataproc cluste

- E. Use an SSD persistent disk and 50% preemptible worker

- F. Store data in Cloud Storage, and change references in scripts from hdfs:// to gs://

- G. Install Hadoop and Spark on a 10-node Compute Engine instance group with standard instance

- H. Install the Cloud Storage connector, and store the data in Cloud Storag

- I. Change references in scripts from hdfs:// to gs://

- J. Install Hadoop and Spark on a 10-node Compute Engine instance group with preemptible instances.Store data in HDF

- K. Change references in scripts from hdfs:// to gs://

Answer: A

NEW QUESTION 20

You need to choose a database to store time series CPU and memory usage for millions of computers. You need to store this data in one-second interval samples. Analysts will be performing real-time, ad hoc analytics against the database. You want to avoid being charged for every query executed and ensure that the schema design will allow for future growth of the dataset. Which database and data model should you choose?

- A. Create a table in BigQuery, and append the new samples for CPU and memory to the table

- B. Create a wide table in BigQuery, create a column for the sample value at each second, and update the row with the interval for each second

- C. Create a narrow table in Cloud Bigtable with a row key that combines the Computer Engine computer identifier with the sample time at each second

- D. Create a wide table in Cloud Bigtable with a row key that combines the computer identifier with the sample time at each minute, and combine the values for each second as column data.

Answer: D

NEW QUESTION 21

You receive data files in CSV format monthly from a third party. You need to cleanse this data, but every third month the schema of the files changes. Your requirements for implementing these transformations include: Executing the transformations on a schedule

Executing the transformations on a schedule Enabling non-developer analysts to modify transformations

Enabling non-developer analysts to modify transformations  Providing a graphical tool for designing transformations

Providing a graphical tool for designing transformations

What should you do?

- A. Use Cloud Dataprep to build and maintain the transformation recipes, and execute them on a scheduled basis

- B. Load each month’s CSV data into BigQuery, and write a SQL query to transform the data to a standard schem

- C. Merge the transformed tables together with a SQL query

- D. Help the analysts write a Cloud Dataflow pipeline in Python to perform the transformatio

- E. The Python code should be stored in a revision control system and modified as the incoming data’s schema changes

- F. Use Apache Spark on Cloud Dataproc to infer the schema of the CSV file before creating a Dataframe.Then implement the transformations in Spark SQL before writing the data out to Cloud Storage and loading into BigQuery

Answer: D

NEW QUESTION 22

......

Thanks for reading the newest Professional-Data-Engineer exam dumps! We recommend you to try the PREMIUM DumpSolutions.com Professional-Data-Engineer dumps in VCE and PDF here: https://www.dumpsolutions.com/Professional-Data-Engineer-dumps/ (239 Q&As Dumps)