Online Amazon-Web-Services DOP-C01 free dumps demo Below:

NEW QUESTION 1

You have an application running on an Amazon EC2 instance and you are using IAM roles to securely access AWS Service APIs. How can you configure your application running on that instance to retrieve the API keys for use with the AWS SDKs?

- A. When assigning an EC2 IAM role to your instance in the console, in the "Chosen SDK" drop-down list, select the SDK that you are using, and the instance will configure the correct SDK on launch with the API keys.

- B. Within your application code, make a GET request to the IAM Service API to retrieve credentials for your user.

- C. When using AWS SDKs and Amazon EC2 roles, you do not have to explicitly retrieve API keys, because the SDK handles retrieving them from the Amazon EC2 MetaData service.

- D. Within your application code, configure the AWS SDK to get the API keys from environment variables, because assigning an Amazon EC2 role stores keys in environment variables on launch.

Answer: C

Explanation:

IAM roles are designed so that your applications can securely make API requests from your instances, without requiring you to manage the security credentials that the applications use. Instead of creating and distributing your AWS credentials, you can delegate permission to make API requests using IAM roles.

For more information on roles for EC2, please refer to the below link: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html
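
As a rough illustration of what the SDK does on your behalf, the sketch below parses a credentials document of the kind the EC2 instance metadata service returns for an attached role. The sample values are placeholders and `parse_role_credentials` is a hypothetical helper, not part of any AWS SDK; in real code you would normally just create an SDK client without passing any keys.

```python
import json
from datetime import datetime, timezone

# Placeholder document shaped like the metadata response for a role.
# All values are made up for illustration.
SAMPLE_RESPONSE = """
{
  "Code": "Success",
  "Type": "AWS-HMAC",
  "AccessKeyId": "ASIAEXAMPLEKEYID",
  "SecretAccessKey": "example-secret",
  "Token": "example-session-token",
  "Expiration": "2030-01-01T00:00:00Z"
}
"""


def parse_role_credentials(document: str) -> dict:
    """Extract the temporary credentials an SDK would use to sign requests."""
    data = json.loads(document)
    if data.get("Code") != "Success":
        raise ValueError("metadata service did not return credentials")
    expires = datetime.strptime(data["Expiration"], "%Y-%m-%dT%H:%M:%SZ")
    return {
        "access_key": data["AccessKeyId"],
        "secret_key": data["SecretAccessKey"],
        "session_token": data["Token"],
        "expires_at": expires.replace(tzinfo=timezone.utc),
    }


creds = parse_role_credentials(SAMPLE_RESPONSE)
```

The credentials are temporary, which is why the SDK refreshes them itself rather than asking the application to store keys.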

NEW QUESTION 2

If I want CloudFormation stack status updates to show up in a continuous delivery system in as close to real time as possible, how should I achieve this?

- A. Use a long-poll on the Resources object in your CloudFormation stack and display those state changes in the UI for the system.

- B. Use a long-poll on the ListStacks API call for your CloudFormation stack and display those state changes in the UI for the system.

- C. Subscribe your continuous delivery system to an SNS topic that you also tell your CloudFormation stack to publish events into.

- D. Subscribe your continuous delivery system to an SQS queue that you also tell your CloudFormation stack to publish events into.

Answer: C

Explanation:

You can monitor the progress of a stack update by viewing the stack's events. The console's Events tab displays each major step in the creation and update of the stack, sorted by the time of each event with the latest events on top. The start of the stack update process is marked with an UPDATE_IN_PROGRESS event for the stack. For more information on monitoring your stack, please visit the below URL:

http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/using-cfn-updating-stacks-monitor-stack.html
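
A minimal sketch of the receiving side of option C, assuming the notification body arrives as one `Key='value'` pair per line. That message shape, the sample values, and the helper names are assumptions made for illustration; check a real notification before relying on the format.

```python
import re

# Assumed shape of the message CloudFormation publishes to the SNS topic:
# one Key='value' pair per line. The values below are made up.
SAMPLE_MESSAGE = (
    "StackName='web-stack'\n"
    "LogicalResourceId='web-stack'\n"
    "ResourceType='AWS::CloudFormation::Stack'\n"
    "ResourceStatus='UPDATE_IN_PROGRESS'\n"
)

PAIR = re.compile(r"^(\w+)='(.*)'$")


def parse_stack_event(message: str) -> dict:
    """Turn the newline-separated pairs into a dictionary."""
    event = {}
    for line in message.splitlines():
        match = PAIR.match(line.strip())
        if match:
            event[match.group(1)] = match.group(2)
    return event


def is_stack_level(event: dict) -> bool:
    """True when the event describes the stack itself, not a single resource."""
    return event.get("ResourceType") == "AWS::CloudFormation::Stack"


event = parse_stack_event(SAMPLE_MESSAGE)
```

The continuous delivery system can then display `event["ResourceStatus"]` as soon as the notification arrives, without polling.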

NEW QUESTION 3

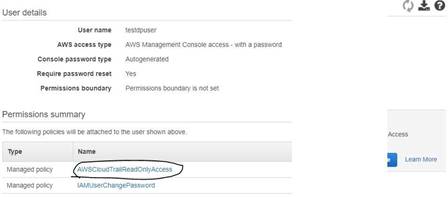

An audit is going to be conducted for your company's AWS account. Which of the following steps will ensure that the auditor has the right access to the logs of your AWS account?

- A. Enable S3 and ELB logs. Send the logs as a zip file to the IT Auditor.

- B. Ensure CloudTrail is enabled. Create a user account for the Auditor and attach the AWSCloudTrailReadOnlyAccess policy to the user.

- C. Ensure that CloudTrail is enabled. Create a user for the IT Auditor and ensure that full control is given to the user for CloudTrail.

- D. Enable CloudWatch logs. Create a user for the IT Auditor and ensure that full control is given to the user for the CloudWatch logs.

Answer: B

Explanation:

The AWS documentation mentions the following:

AWS CloudTrail is an AWS service that helps you enable governance, compliance, and operational and risk auditing of your AWS account. Actions taken by a user, role, or an AWS service are recorded as events in CloudTrail. Events include actions taken in the AWS Management Console, AWS Command Line Interface, and AWS SDKs and APIs.

For more information on CloudTrail, please visit the below URL:

• http://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-user-guide.html

NEW QUESTION 4

A company wants to create standard templates for deployment of their Infrastructure. Which AWS service can be used in this regard? Please choose one option.

- A. Amazon Simple Workflow Service

- B. AWS Elastic Beanstalk

- C. AWS CloudFormation

- D. AWS OpsWorks

Answer: C

Explanation:

AWS CloudFormation gives developers and systems administrators an easy way to create and manage a collection of related AWS resources, provisioning and updating them in an orderly and predictable fashion.

You can use AWS CloudFormation's sample templates or create your own templates to describe the AWS resources, and any associated dependencies or runtime parameters, required to run your application. You don't need to figure out the order for provisioning AWS services or the subtleties of making those dependencies work. CloudFormation takes care of this for you. After the AWS resources are deployed, you can modify and update them in a controlled and predictable way, in effect applying version control to your AWS infrastructure the same way you do with your software. You can also visualize your templates as diagrams and edit them using a drag-and-drop interface with the AWS CloudFormation Designer.

For more information on CloudFormation, please visit the link:

• https://aws.amazon.com/cloudformation/

NEW QUESTION 5

You have enabled Elastic Load Balancing HTTP health checking. After looking at the AWS Management Console, you see that all instances are passing health checks, but your customers are reporting that your site is not responding. What is the cause?

- A. The HTTP health checking system is misreporting due to latency in inter-instance metadata synchronization.

- B. The health check in place is not sufficiently evaluating the application function.

- C. The application is returning a positive health check too quickly for the AWS Management Console to respond.

- D. Latency in DNS resolution is interfering with Amazon EC2 metadata retrieval.

Answer: B

Explanation:

You need a custom health check that evaluates the application's functionality. The standard health checks are not enough: if the application stops working and you have no custom health check, the instances will still be deemed healthy.

If you have custom health checks, you can send the information from your health checks to Auto Scaling so that Auto Scaling can use this information. For example, if you determine that an instance is not functioning as expected, you can set the health status of the instance to Unhealthy. The next time that Auto Scaling performs a health check on the instance, it will determine that the instance is unhealthy and then launch a replacement instance.

For more information on Auto Scaling health checks, please refer to the below AWS documentation link:

http://docs.aws.amazon.com/autoscaling/latest/userguide/healthcheck.html
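
The custom health check described above could be reported along these lines. This is a sketch, assuming `autoscaling` is a boto3 Auto Scaling client (or anything exposing the same `set_instance_health` call); the helper name, the instance ID, and the `FakeAutoScaling` stand-in are hypothetical and only there so the example runs without AWS access.

```python
def report_instance_health(autoscaling, instance_id: str, app_is_working: bool) -> str:
    """Tell Auto Scaling what the application-level check concluded."""
    status = "Healthy" if app_is_working else "Unhealthy"
    autoscaling.set_instance_health(InstanceId=instance_id, HealthStatus=status)
    return status


class FakeAutoScaling:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def set_instance_health(self, **kwargs):
        self.calls.append(kwargs)


fake = FakeAutoScaling()
result = report_instance_health(fake, "i-0123456789abcdef0", app_is_working=False)
```

How `app_is_working` is determined is up to the application, for example by exercising a real code path rather than only checking that the web server answers.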

NEW QUESTION 6

When you add lifecycle hooks to an Auto Scaling group, what are the wait states that occur during the scale-in and scale-out process? Choose 2 answers from the options given below.

- A. Launching:Wait

- B. Exiting:Wait

- C. Pending:Wait

- D. Terminating:Wait

Answer: CD

Explanation:

The AWS documentation mentions the following:

After you add lifecycle hooks to your Auto Scaling group, they work as follows:

1. Auto Scaling responds to scale out events by launching instances and scale in events by terminating instances.

2. Auto Scaling puts the instance into a wait state (Pending:Wait or Terminating:Wait). The instance is paused until either you tell Auto Scaling to continue or the timeout period ends.

For more information on Auto Scaling lifecycle hooks, please visit the below URL:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/lifecycle-hooks.html

NEW QUESTION 7

How long does the AWS OpsWorks Stacks service wait for a response from an underlying instance before deeming it a failed instance?

- A. 1 minute.

- B. 5 minutes.

- C. 20 minutes.

- D. 60 minutes.

Answer: B

Explanation:

The AWS documentation mentions:

Every instance has an AWS OpsWorks Stacks agent that communicates regularly with the service. AWS OpsWorks Stacks uses that communication to monitor instance health. If an agent does not communicate with the service for more than approximately five minutes, AWS OpsWorks Stacks considers the instance to have failed.

For more information on the auto healing feature, please visit the below URL: http://docs.aws.amazon.com/opsworks/latest/userguide/workinginstances-autohealing.html

NEW QUESTION 8

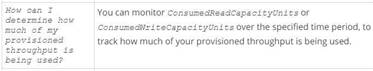

You are managing the development of an application that uses DynamoDB to store JSON data. You have already set the Read and Write capacity of the DynamoDB table. You are unsure of the amount of the traffic that will be received by the application during the deployment time. How can you ensure that the DynamoDB is not highly throttled and does not become a bottleneck for the application? Choose 2 answers from the options below.

- A. Monitor the ConsumedReadCapacityUnits and ConsumedWriteCapacityUnits metrics using CloudWatch.

- B. Monitor the SystemErrors metric using CloudWatch.

- C. Create a CloudWatch alarm which would then send a trigger to AWS Lambda to increase the Read and Write capacity of the DynamoDB table.

- D. Create a CloudWatch alarm which would then send a trigger to AWS Lambda to create a new DynamoDB table.

Answer: AC

Explanation:

Refer to the following AWS Documentation that specifies what should be monitored for a DynamoDB table.

For more information on monitoring DynamoDB, please see the below link:

• http://docs.aws.amazon.com/amazondynamodb/latest/developerguide/monitoring-cloudwatch.html
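
The capacity increase in option C might be sketched as follows, assuming `dynamodb` is a boto3 DynamoDB client and that the current capacity is already known to the caller. The doubling factor, the ceiling, the table name, and the `FakeDynamoDB` stand-in are illustrative assumptions, not AWS recommendations.

```python
def scale_up_table(dynamodb, table_name, current_read, current_write,
                   factor=2, ceiling=1000):
    """Raise provisioned throughput, capped at a chosen ceiling."""
    new_read = min(current_read * factor, ceiling)
    new_write = min(current_write * factor, ceiling)
    if (new_read, new_write) == (current_read, current_write):
        # Nothing to change, so skip the API call entirely.
        return current_read, current_write
    dynamodb.update_table(
        TableName=table_name,
        ProvisionedThroughput={
            "ReadCapacityUnits": new_read,
            "WriteCapacityUnits": new_write,
        },
    )
    return new_read, new_write


class FakeDynamoDB:
    """Stand-in client that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def update_table(self, **kwargs):
        self.calls.append(kwargs)


fake_db = FakeDynamoDB()
new_capacity = scale_up_table(fake_db, "orders", current_read=10, current_write=5)
```

In a Lambda function triggered by the CloudWatch alarm, the handler would look up the table's current throughput and then call a helper like this one.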

NEW QUESTION 9

Your security officer has told you that you need to tighten up the logging of all events that occur on your AWS account. He wants to be able to access all events that occur on the account across all regions quickly and in the simplest way possible. He also wants to make sure he is the only person that has access to these events in the most secure way possible. Which of the following would be the best solution to assure his requirements are met? Choose the correct answer from the options below

- A. Use CloudTrail to log all events to one S3 bucket. Make this S3 bucket only accessible by your security officer with a bucket policy that restricts access to his user only and also add MFA to the policy for a further level of security.

- B. Use CloudTrail to log all events to an Amazon Glacier Vault. Make sure the vault access policy only grants access to the security officer's IP address.

- C. Use CloudTrail to send all API calls to CloudWatch and send an email to the security officer every time an API call is made. Make sure the emails are encrypted.

- D. Use CloudTrail to log all events to a separate S3 bucket in each region as CloudTrail cannot write to a bucket in a different region. Use MFA and bucket policies on all the different buckets.

Answer: A

Explanation:

AWS CloudTrail is a service that enables governance, compliance, operational auditing, and risk auditing of your AWS account. With CloudTrail, you can log, continuously monitor, and retain events related to API calls across your AWS infrastructure. CloudTrail provides a history of AWS API calls for your account, including API calls made through the AWS Management Console, AWS SDKs, command line tools, and other AWS services. This history simplifies security analysis, resource change tracking, and troubleshooting.

You can configure CloudTrail to send all logs to a central S3 bucket. For more information on CloudTrail, please visit the below URL:

• https://aws.amazon.com/cloudtrail/

NEW QUESTION 10

Your company is concerned with EBS volume backup on Amazon EC2 and wants to ensure they have proper backups and that the data is durable. What solution would you implement and why? Choose the correct answer from the options below

- A. Configure Amazon Storage Gateway with EBS volumes as the data source and store the backups on premise through the storage gateway.

- B. Write a cronjob on the server that compresses the data that needs to be backed up using gzip compression, then use AWS CLI to copy the data into an S3 bucket for durability.

- C. Use a lifecycle policy to back up EBS volumes stored on Amazon S3 for durability.

- D. Write a cronjob that uses the AWS CLI to take a snapshot of production EBS volumes. The data is durable because EBS snapshots are stored on the Amazon S3 standard storage class.

Answer: D

Explanation:

You can take snapshots of EBS volumes, and to automate the process you can use the CLI. The snapshots are automatically stored on S3 for durability.

For more information on EBS snapshots, please refer to the below link: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSSnapshots.html
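
The answer uses the AWS CLI from cron; the sketch below shows the same idea with the Python SDK instead, assuming `ec2` is a boto3 EC2 client. The volume IDs, the description text, and the `FakeEC2` stand-in are made up so the example runs without AWS access.

```python
from datetime import date


def snapshot_volumes(ec2, volume_ids, today=None):
    """Request one snapshot per volume and return the new snapshot IDs."""
    today = today or date.today()
    snapshot_ids = []
    for volume_id in volume_ids:
        response = ec2.create_snapshot(
            VolumeId=volume_id,
            Description=f"Scheduled backup of {volume_id} on {today.isoformat()}",
        )
        snapshot_ids.append(response["SnapshotId"])
    return snapshot_ids


class FakeEC2:
    """Stand-in client that hands back predictable snapshot IDs."""

    def __init__(self):
        self.calls = []

    def create_snapshot(self, **kwargs):
        self.calls.append(kwargs)
        return {"SnapshotId": f"snap-{len(self.calls):04d}"}


fake_ec2 = FakeEC2()
created = snapshot_volumes(fake_ec2, ["vol-aaa", "vol-bbb"], today=date(2030, 1, 1))
```

A scheduler such as cron would run a script like this at the desired backup interval.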

NEW QUESTION 11

Your company needs to set up instances running as part of an Auto Scaling group. Part of the requirement is to use lifecycle hooks to set up custom software and do the necessary configuration on the instances. The setup might take an hour, or might finish before the hour is up. How should you set up lifecycle hooks for the Auto Scaling group? Choose 2 ideal actions you would include as part of the lifecycle hook.

- A. Configure the lifecycle hook to record heartbeats. If the hour is up, restart the timeout period.

- B. Configure the lifecycle hook to record heartbeats. If the hour is up, choose to terminate the current instance and start a new one.

- C. If the software installation and configuration is complete, then restart the time period.

- D. If the software installation and configuration is complete, then send a signal to complete the launch of the instance.

Answer: AD

Explanation:

The AWS documentation provides the following information on lifecycle hooks:

By default, the instance remains in a wait state for one hour, and then Auto Scaling continues the launch or terminate process (Pending:Proceed or Terminating:Proceed). If you need more time, you can restart the timeout period by recording a heartbeat. If you finish before the timeout period ends, you can complete the lifecycle action, which continues the launch or termination process.

For more information on AWS Lifecycle hooks, please visit the below URL:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/lifecycle-hooks.html
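
The two actions in the answer can be sketched as one decision, assuming `autoscaling` is a boto3 Auto Scaling client. The hook, group, and instance names as well as the `FakeAutoScaling` stand-in are hypothetical.

```python
def advance_lifecycle(autoscaling, hook, group, instance_id, setup_done):
    """Complete the hook when setup is finished, otherwise buy more time."""
    common = dict(
        LifecycleHookName=hook,
        AutoScalingGroupName=group,
        InstanceId=instance_id,
    )
    if setup_done:
        # Setup finished early: let the launch continue right away.
        autoscaling.complete_lifecycle_action(
            LifecycleActionResult="CONTINUE", **common
        )
        return "completed"
    # Setup still running: restart the timeout period.
    autoscaling.record_lifecycle_action_heartbeat(**common)
    return "heartbeat"


class FakeAutoScaling:
    """Stand-in client that records which call was made."""

    def __init__(self):
        self.calls = []

    def complete_lifecycle_action(self, **kwargs):
        self.calls.append(("complete", kwargs))

    def record_lifecycle_action_heartbeat(self, **kwargs):
        self.calls.append(("heartbeat", kwargs))


fake_asg = FakeAutoScaling()
first = advance_lifecycle(fake_asg, "install-hook", "web-asg", "i-0abc", setup_done=False)
second = advance_lifecycle(fake_asg, "install-hook", "web-asg", "i-0abc", setup_done=True)
```

The setup script on the instance would call the heartbeat branch periodically while it works and the completion branch once at the end.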

NEW QUESTION 12

You currently have a set of instances running on your OpsWorks stack. You need to install security updates on these servers. What does AWS recommend in terms of how the security updates should be deployed?

Choose 2 answers from the options given below.

- A. Create and start new instances to replace your current online instances. Then delete the current instances.

- B. Create a new OpsWorks stack with the new instances.

- C. On Linux-based instances in Chef 11.10 or older stacks, run the Update Dependencies stack command.

- D. Create a CloudFormation template which can be used to replace the instances.

Answer: AC

Explanation:

The AWS Documentation mentions the following

By default, AWS OpsWorks Stacks automatically installs the latest updates during setup, after an instance finishes booting. AWS OpsWorks Stacks does not automatically install updates after an instance is online, to avoid interruptions such as restarting application servers. Instead, you manage updates to your online instances yourself, so you can minimize any disruptions.

We recommend that you use one of the following to update your online instances.

Create and start new instances to replace your current online instances. Then delete the current instances. The new instances will have the latest set of security patches installed during setup.

On Linux-based instances in Chef 11.10 or older stacks, run the Update Dependencies stack command, which installs the current set of security patches and other updates on the specified instances.

For more information on OpsWorks updates, please visit the below URL:

• http://docs.aws.amazon.com/opsworks/latest/userguide/best-practices-updates.html

NEW QUESTION 13

Your company is planning to set up a WordPress application. The WordPress application will connect to a MySQL database. Part of the requirement is to ensure that the database environment is fault tolerant and highly available. Which 2 of the following options can individually help fulfil this requirement?

- A. Create a MySQL RDS environment with the Multi-AZ feature enabled.

- B. Create a MySQL RDS environment and create a Read Replica.

- C. Create multiple EC2 instances in the same AZ. Host MySQL and enable replication via scripts between the instances.

- D. Create multiple EC2 instances in separate AZs. Host MySQL and enable replication via scripts between the instances.

Answer: AD

Explanation:

One way to ensure high availability and fault tolerance is to place instances across multiple Availability Zones. Hence, if you are hosting MySQL yourself, ensure you have instances spread across multiple AZs.

The AWS documentation mentions the following about the Multi-AZ feature:

Amazon RDS provides high availability and failover support for DB instances using Multi-AZ deployments. Amazon RDS uses several different technologies to provide failover support. Multi-AZ deployments for Oracle, PostgreSQL, MySQL, and MariaDB DB instances use Amazon's failover technology.

For more information on AWS Multi-AZ deployments, please visit the below URL: http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Concepts.MultiAZ.html

NEW QUESTION 14

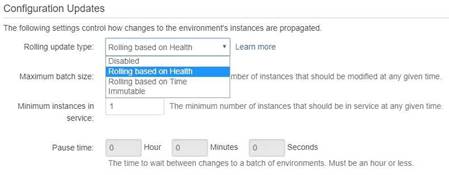

Which of the following is not a rolling update type available for configuration updates in the Elastic Beanstalk service?

- A. Rolling based on Health

- B. Rolling based on Instances

- C. Immutable

- D. Rolling based on time

Answer: B

Explanation:

When you go to the configuration of your Elastic Beanstalk environment, below are the updates that are possible

The AWS Documentation mentions

1) With health-based rolling updates, Elastic Beanstalk waits until instances in a batch pass health checks before moving on to the next batch.

2) For time-based rolling updates, you can configure the amount of time that Elastic Beanstalk waits after completing the launch of a batch of instances before moving on to the next batch. This pause time allows your application to bootstrap and start serving requests.

3) Immutable environment updates are an alternative to rolling updates that ensure that configuration changes that require replacing instances are applied efficiently and safely. If an immutable environment update fails, the rollback process requires only terminating an Auto Scaling group. A failed rolling update, on the other hand, requires performing an additional rolling update to roll back the changes.

For more information on rolling updates for Elastic Beanstalk configuration updates, please visit the below URL:

• http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.rollingupdates.html

NEW QUESTION 15

The project you are working on currently uses a single AWS CloudFormation template to deploy its AWS infrastructure, which supports a multi-tier web application. You have been tasked with organizing the AWS CloudFormation resources so that they can be maintained in the future, and so that different departments such as Networking and Security can review the architecture before it goes to Production. How should you do this in a way that accommodates each department, using their existing workflows?

- A. Organize the AWS CloudFormation template so that related resources are next to each other in the template, such as VPC subnets and routing rules for Networking and security groups and IAM information for Security.

- B. Separate the AWS CloudFormation template into a nested structure that has individual templates for the resources that are to be governed by different departments, and use the outputs from the networking and security stacks for the application template that you control.

- C. Organize the AWS CloudFormation template so that related resources are next to each other in the template for each department's use, leverage your existing continuous integration tool to constantly deploy changes from all parties to the Production environment, and then run tests for validation.

- D. Use a custom application and the AWS SDK to replicate the resources defined in the current AWS CloudFormation template, and use the existing code review system to allow other departments to approve changes before altering the application for future deployments.

Answer: B

Explanation:

As your infrastructure grows, common patterns can emerge in which you declare the same components in each of your templates. You can separate out these common components and create dedicated templates for them. That way, you can mix and match different templates but use nested stacks to create a single, unified stack. Nested stacks are stacks that create other stacks. To create nested stacks, use the AWS::CloudFormation::Stack resource in your template to reference other templates.

For more information on best practices for CloudFormation, please refer to the below link: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/best-practices.html
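
A parent template built from nested stacks might look like the sketch below, written as a Python dictionary and serialized to JSON. The template URLs, the parameter names, and the output names (`VpcId`, `SubnetId`, `WebSecurityGroupId`) are hypothetical; each child template would have to declare matching parameters and outputs.

```python
import json

# Hypothetical location of the per-department child templates.
BASE_URL = "https://s3.amazonaws.com/example-templates"


def nested_stack(template_url, parameters=None):
    """Build an AWS::CloudFormation::Stack resource for a child template."""
    resource = {
        "Type": "AWS::CloudFormation::Stack",
        "Properties": {"TemplateURL": template_url},
    }
    if parameters:
        resource["Properties"]["Parameters"] = parameters
    return resource


def stack_output(stack_name, output_name):
    """Reference an output exported by a nested stack."""
    return {"Fn::GetAtt": [stack_name, f"Outputs.{output_name}"]}


parent_template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        # Owned and reviewed by the Networking department.
        "NetworkStack": nested_stack(f"{BASE_URL}/network.json"),
        # Owned and reviewed by the Security department.
        "SecurityStack": nested_stack(
            f"{BASE_URL}/security.json",
            {"VpcId": stack_output("NetworkStack", "VpcId")},
        ),
        # The application template consumes outputs from both.
        "ApplicationStack": nested_stack(
            f"{BASE_URL}/application.json",
            {
                "SubnetId": stack_output("NetworkStack", "SubnetId"),
                "SecurityGroupId": stack_output("SecurityStack", "WebSecurityGroupId"),
            },
        ),
    },
}

template_body = json.dumps(parent_template, indent=2)
```

Because each child template lives in its own file, each department can review its part through its existing workflow.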

NEW QUESTION 16

You have implemented a system to automate deployments of your configuration and application dynamically after an Amazon EC2 instance in an Auto Scaling group is launched. Your system uses a configuration management tool that works in a standalone configuration, where there is no master node. Due to the volatility of application load, new instances must be brought into service within three minutes of the launch of the instance operating system. The deployment stages take the following times to complete:

1) Installing configuration management agent: 2mins

2) Configuring instance using artifacts: 4mins

3) Installing application framework: 15mins

4) Deploying application code: 1min

What process should you use to automate the deployment using this type of standalone agent configuration?

- A. Configure your Auto Scaling launch configuration with an Amazon EC2 UserData script to install the agent, pull configuration artifacts and application code from an Amazon S3 bucket, and then execute the agent to configure the infrastructure and application.

- B. Build a custom Amazon Machine Image that includes all components pre-installed, including an agent, configuration artifacts, application frameworks, and code. Create a startup script that executes the agent to configure the system on startup.

- C. Build a custom Amazon Machine Image that includes the configuration management agent and application framework pre-installed. Configure your Auto Scaling launch configuration with an Amazon EC2 UserData script to pull configuration artifacts and application code from an Amazon S3 bucket, and then execute the agent to configure the system.

- D. Create a web service that polls the Amazon EC2 API to check for new instances that are launched in an Auto Scaling group. When it recognizes a new instance, execute a remote script via SSH to install the agent, SCP the configuration artifacts and application code, and finally execute the agent to configure the system.

Answer: B

Explanation:

Since the new instances need to be brought into service within 3 minutes, the best option is to pre-bake all the components into an AMI. If you use the User Data option instead, installing and configuring the various components at launch will exceed that limit, based on the stage times given in the question.

For more information on AMI design please see the below link:

• https://aws.amazon.com/answers/configuration-management/aws-ami-design/
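
The arithmetic behind this answer, using the stage times from the question, can be checked with a short sketch. The stage keys are made-up labels, and the time the startup script needs to run the agent on a fully baked image is not given in the question, so it is left out.

```python
# Stage durations in minutes, taken from the question.
STAGE_MINUTES = {
    "install_agent": 2,
    "configure_instance": 4,
    "install_framework": 15,
    "deploy_code": 1,
}
LAUNCH_BUDGET_MINUTES = 3


def boot_time(prebaked):
    """Minutes spent at launch on stages that are not baked into the AMI."""
    return sum(
        minutes for stage, minutes in STAGE_MINUTES.items() if stage not in prebaked
    )


everything_at_launch = boot_time(set())
agent_and_framework_baked = boot_time({"install_agent", "install_framework"})
everything_baked = boot_time(set(STAGE_MINUTES))

fits_budget = {
    "everything_at_launch": everything_at_launch <= LAUNCH_BUDGET_MINUTES,
    "agent_and_framework_baked": agent_and_framework_baked <= LAUNCH_BUDGET_MINUTES,
    "everything_baked": everything_baked <= LAUNCH_BUDGET_MINUTES,
}
```

Running everything at launch takes 22 minutes and baking only the agent and framework still leaves 5 minutes, so only the fully baked image fits the 3-minute budget.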

NEW QUESTION 17

When thinking of AWS Elastic Beanstalk's model, which is true?

- A. Applications have many deployments, deployments have many environments.

- B. Environments have many applications, applications have many deployments.

- C. Applications have many environments, environments have many deployments.

- D. Deployments have many environments, environments have many applications.

Answer: C

Explanation:

The first step in using Elastic Beanstalk is to create an application, which represents your web application in AWS. In Elastic Beanstalk an application serves as a container for the environments that run your web app, and versions of your web app's source code, saved configurations, logs and other artifacts that you create while using Elastic Beanstalk.

For more information on Applications, please refer to the below link: http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/applications.html

Deploying a new version of your application to an environment is typically a fairly quick process. The new source bundle is deployed to an instance and extracted, and then the web container or application server picks up the new version and restarts if necessary. During deployment, your application might still become unavailable to users for a few seconds. You can prevent this by configuring your environment to use rolling deployments to deploy the new version to instances in batches.

For more information on deployment, please refer to the below link: http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/using-features.deploy-existing-version.html

NEW QUESTION 18

Which of the following deployment types are available in the CodeDeploy service? Choose 2 answers from the options given below.

- A. In-place deployment

- B. Rolling deployment

- C. Immutable deployment

- D. Blue/green deployment

Answer: AD

Explanation:

The following deployment types are available:

1. In-place deployment: The application on each instance in the deployment group is stopped, the latest application revision is installed, and the new version of the application is started and validated.

2. Blue/green deployment: The instances in a deployment group (the original environment) are replaced by a different set of instances (the replacement environment).

For more information on CodeDeploy, please refer to the below link:

• http://docs.aws.amazon.com/codedeploy/latest/userguide/primary-components.html

NEW QUESTION 19

Which of the below services can be used to deploy application code content stored in Amazon S3 buckets, GitHub repositories, or Bitbucket repositories?

- A. CodeCommit

- B. CodeDeploy

- C. S3 Lifecycles

- D. Route53

Answer: B

Explanation:

The AWS documentation mentions:

AWS CodeDeploy is a deployment service that automates application deployments to Amazon EC2 instances or on-premises instances in your own facility.

For more information on CodeDeploy, please refer to the below link:

• http://docs.aws.amazon.com/codedeploy/latest/userguide/welcome.html

NEW QUESTION 20

You are a DevOps Engineer for a large organization. The company wants to start using CloudFormation templates to build their resources in AWS. You are getting requirements for the templates from various departments, such as networking, security, and application. What is the best way to architect these CloudFormation templates?

- A. Use a single CloudFormation template, since this would reduce the maintenance overhead on the templates themselves.

- B. Create separate logical templates, for example, a separate template for networking, security, application, etc. Then nest the relevant templates.

- C. Consider using Elastic Beanstalk to create your environments since CloudFormation is not built for such customization.

- D. Consider using OpsWorks to create your environments since CloudFormation is not built for such customization.

Answer: B

Explanation:

The AWS documentation mentions the following:

As your infrastructure grows, common patterns can emerge in which you declare the same components in each of your templates. You can separate out these common components and create dedicated templates for them. That way, you can mix and match different templates but use nested stacks to create a single, unified stack. Nested stacks are stacks that create other stacks. To create nested stacks, use the AWS::CloudFormation::Stack resource in your template to reference other templates.

For more information on CloudFormation best practices, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/best-practices.html

NEW QUESTION 21

Your application requires long-term storage for backups and other data that you need to keep readily available but with lower cost. Which S3 storage option should you use?

- A. Amazon S3 Standard - Infrequent Access

- B. S3 Standard

- C. Glacier

- D. Reduced Redundancy Storage

Answer: A

Explanation:

The AWS Documentation mentions the following

Amazon S3 Standard - Infrequent Access (Standard - IA) is an Amazon S3 storage class for data that is accessed less frequently, but requires rapid access when needed. Standard - IA offers the high durability, throughput, and low latency of Amazon S3 Standard, with a low per GB storage price and per GB retrieval fee.

For more information on S3 Storage classes, please visit the below URL:

• https://aws.amazon.com/s3/storage-classes/

NEW QUESTION 22

You have a large number of web servers in an Auto Scaling group behind a load balancer. On an hourly basis, you want to filter and process the logs to collect data on unique visitors, and then put that data in a durable data store in order to run reports. Web servers in the Auto Scaling group are constantly launching and terminating based on your scaling policies, but you do not want to lose any of the log data from these servers during a stop/termination initiated by a user or by Auto Scaling. What two approaches will meet these requirements? Choose two answers from the options given below.

- A. Install an Amazon CloudWatch Logs Agent on every web server during the bootstrap process. Create a CloudWatch log group and define Metric Filters to create custom metrics that track unique visitors from the streaming web server logs. Create a scheduled task on an Amazon EC2 instance that runs every hour to generate a new report based on the CloudWatch custom metrics.

- B. On the web servers, create a scheduled task that executes a script to rotate and transmit the logs to Amazon Glacier. Ensure that the operating system shutdown procedure triggers a logs transmission when the Amazon EC2 instance is stopped/terminated. Use Amazon Data Pipeline to process the data in Amazon Glacier and run reports every hour.

- C. On the web servers, create a scheduled task that executes a script to rotate and transmit the logs to an Amazon S3 bucket. Ensure that the operating system shutdown procedure triggers a logs transmission when the Amazon EC2 instance is stopped/terminated. Use AWS Data Pipeline to move log data from the Amazon S3 bucket to Amazon Redshift in order to process and run reports every hour.

- D. Install an AWS Data Pipeline Logs Agent on every web server during the bootstrap process. Create a log group object in AWS Data Pipeline, and define Metric Filters to move processed log data directly from the web servers to Amazon Redshift and run reports every hour.

Answer: AC

Explanation:

You can use the CloudWatch Logs agent installer on an existing EC2 instance to install and configure the CloudWatch Logs agent.

For more information, please visit the below link:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/QuickStartEC2Instance.html

You can publish your own metrics to CloudWatch using the AWS CLI or an API. For more information, please visit the below link:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/publishingMetrics.html

Amazon Redshift is a fast, fully managed data warehouse that makes it simple and cost-effective to analyze all your data using standard SQL and your existing Business Intelligence (BI) tools. It allows you to run complex analytic queries against petabytes of structured data, using sophisticated query optimization, columnar storage on high-performance local disks, and massively parallel query execution. Most results come back in seconds. For more information on copying data from S3 to Redshift, please refer to the below link:

• http://docs.aws.amazon.com/datapipeline/latest/DeveloperGuide/dp-copydata-redshift.html
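Whichever store holds the logs, the hourly "unique visitors" step comes down to counting distinct clients per hour. The following is a minimal Python sketch of that step only. It assumes the web servers write Common Log Format lines and that a visitor is identified by client IP; both are assumptions, and the sample log lines are made up for illustration.

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches the client IP and the bracketed timestamp of a Common Log Format line.
LOG_LINE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\]')


def unique_visitors_per_hour(lines):
    """Return {hour label: number of distinct client IPs seen in that hour}."""
    seen = defaultdict(set)
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that are not in the expected format
        ts = datetime.strptime(match.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        seen[ts.strftime("%Y-%m-%d %H:00")].add(match.group("ip"))
    return {hour: len(ips) for hour, ips in seen.items()}


# Made-up sample lines; the IPs come from documentation address ranges.
SAMPLE = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512',
    '203.0.113.5 - - [10/Oct/2023:13:58:01 +0000] "GET /a HTTP/1.1" 200 128',
    '198.51.100.7 - - [10/Oct/2023:13:59:59 +0000] "GET / HTTP/1.1" 200 512',
    '198.51.100.7 - - [10/Oct/2023:14:00:00 +0000] "GET / HTTP/1.1" 200 512',
    'not a log line',
]

if __name__ == "__main__":
    for hour, count in sorted(unique_visitors_per_hour(SAMPLE).items()):
        print(hour, count)
```

In option A the equivalent counting would be expressed through metric filters, and in option C through SQL in Redshift; the sketch only shows the logic being computed.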

NEW QUESTION 23

You need to create a Route53 record automatically in CloudFormation when not running in production during all launches of a Template. How should you implement this?

- A. Use a Parameter for environment, and add a Condition on the Route53 Resource in the template to create the record only when environment is not production.

- B. Create two templates, one with the Route53 record value and one with a null value for the record. Use the one without it when deploying to production.

- C. Use a Parameter for environment, and add a Condition on the Route53 Resource in the template to create the record with a null string when environment is production.

- D. Create two templates, one with the Route53 record and one without it. Use the one without it when deploying to production.

Answer: A

Explanation:

The optional Conditions section includes statements that define when a resource is created or when a property is defined. For example, you can compare whether a value is equal to another value. Based on the result of that condition, you can conditionally create resources. If you have multiple conditions, separate them with commas.

You might use conditions when you want to reuse a template that can create resources in different contexts, such as a test environment versus a production environment. In your template, you can add an EnvironmentType input parameter, which accepts either prod or test as inputs. For the production environment, you might include Amazon EC2 instances with certain capabilities; however, for the test environment, you want to use reduced capabilities to save money. With conditions, you can define which resources are created and how they're configured for each environment type.

For more information on CloudFormation conditions please refer to the below link: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/conditions-section-structure.html
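As an illustration of answer A, here is a minimal sketch of a template with one parameter, one condition, and a Route53 record that is created only outside production. The template is written as a Python dict so it can be dumped to JSON. The names `EnvironmentType`, `NotProduction`, `DnsRecord` and the example.com values are hypothetical, and the small `evaluate` helper only mimics locally how CloudFormation would resolve the condition; it is not part of any AWS library.

```python
import json

# Hypothetical template: the record is created only when EnvironmentType != prod.
TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "EnvironmentType": {
            "Type": "String",
            "AllowedValues": ["prod", "test"],
            "Default": "test",
        }
    },
    "Conditions": {
        "NotProduction": {
            "Fn::Not": [{"Fn::Equals": [{"Ref": "EnvironmentType"}, "prod"]}]
        }
    },
    "Resources": {
        "DnsRecord": {
            "Type": "AWS::Route53::RecordSet",
            "Condition": "NotProduction",  # resource is skipped when false
            "Properties": {
                "HostedZoneName": "example.com.",
                "Name": "test.example.com.",
                "Type": "CNAME",
                "TTL": "300",
                "ResourceRecords": ["app.example.com"],
            },
        }
    },
}


def evaluate(expr, params):
    """Locally evaluate the small subset of intrinsic functions used above."""
    if isinstance(expr, dict):
        if "Ref" in expr:
            return params[expr["Ref"]]
        if "Fn::Equals" in expr:
            left, right = expr["Fn::Equals"]
            return evaluate(left, params) == evaluate(right, params)
        if "Fn::Not" in expr:
            return not evaluate(expr["Fn::Not"][0], params)
    return expr  # plain literal


def resources_created(template, params):
    """List logical IDs whose Condition (if any) evaluates to true."""
    conditions = {
        name: evaluate(body, params)
        for name, body in template.get("Conditions", {}).items()
    }
    return [
        logical_id
        for logical_id, resource in template["Resources"].items()
        if conditions.get(resource.get("Condition"), True)
    ]


if __name__ == "__main__":
    print(json.dumps(TEMPLATE, indent=2))
    print(resources_created(TEMPLATE, {"EnvironmentType": "test"}))
    print(resources_created(TEMPLATE, {"EnvironmentType": "prod"}))
```

Keeping a single template with a condition avoids the drift that two near-identical templates (options B and D) tend to accumulate.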

NEW QUESTION 24

Your company has multiple applications running on AWS. Your company wants to develop a tool that notifies on-call teams immediately via email when an alarm is triggered in your environment. You have multiple on-call teams that work different shifts, and the tool should handle notifying the correct teams at the correct times. How should you implement this solution?

- A. Create an Amazon SNS topic and an Amazon SQS queue. Configure the Amazon SQS queue as a subscriber to the Amazon SNS topic. Configure CloudWatch alarms to notify this topic when an alarm is triggered. Create an Amazon EC2 Auto Scaling group with both minimum and desired Instances configured to 0. Worker nodes in this group spawn when messages are added to the queue. Workers then use Amazon Simple Email Service to send messages to your on call teams.

- B. Create an Amazon SNS topic and configure your on-call team email addresses as subscribers. Use the AWS SDK tools to integrate your application with Amazon SNS and send messages to this new topic. Notifications will be sent to on-call users when a CloudWatch alarm is triggered.

- C. Create an Amazon SNS topic and configure your on-call team email addresses as subscribers. Create a secondary Amazon SNS topic for alarms and configure your CloudWatch alarms to notify this topic when triggered. Create an HTTP subscriber to this topic that notifies your application via HTTP POST when an alarm is triggered. Use the AWS SDK tools to integrate your application with Amazon SNS and send messages to the first topic so that on-call engineers receive alerts.

- D. Create an Amazon SNS topic for each on-call group, and configure each of these with the team member emails as subscribers. Create another Amazon SNS topic and configure your CloudWatch alarms to notify this topic when triggered. Create an HTTP subscriber to this topic that notifies your application via HTTP POST when an alarm is triggered. Use the AWS SDK tools to integrate your application with Amazon SNS and send messages to the correct team topic when on shift.

Answer: D

Explanation:

Option D fulfils all the requirements:

1) Create an SNS topic for each on-call group and subscribe that group's members by email, so that only the relevant team receives each notification.

2) Ensure the application uses the HTTPS endpoint and the SDK to publish messages.

Option A is invalid because the SQS service is not required.

Options B and C are incorrect. The requirement is to notify only the on-call team working the current shift when an alarm is triggered, not every on-call team in the company. Because options B and C do not configure an SNS topic for each on-call team, notifications would be sent to all the on-call teams. Hence these two options are invalid. For more information on setting up notifications, please refer to the below AWS document: http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/US_SetupSNS.html
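The routing step in option D can be sketched in Python as follows. This is an illustrative sketch, not a reference implementation: the shift table, the topic ARNs and the function names are hypothetical, and the code only builds the arguments an application might pass to the SNS Publish call rather than calling AWS. It assumes the alarm topic delivers the usual SNS JSON envelope whose `Message` field contains the CloudWatch alarm as a JSON string.

```python
import json
from datetime import datetime, timezone

# Hypothetical shift table: [start hour, end hour) in UTC -> team topic ARN.
SHIFT_TOPICS = [
    (0, 8, "arn:aws:sns:us-east-1:123456789012:oncall-apac"),
    (8, 16, "arn:aws:sns:us-east-1:123456789012:oncall-emea"),
    (16, 24, "arn:aws:sns:us-east-1:123456789012:oncall-amer"),
]


def topic_for(now):
    """Pick the topic of the team on shift; `now` must be timezone-aware."""
    hour = now.astimezone(timezone.utc).hour
    for start, end, arn in SHIFT_TOPICS:
        if start <= hour < end:
            return arn
    raise ValueError(f"no shift covers hour {hour}")


def build_publish_request(sns_post_body, now):
    """Turn the body POSTed by the alarm topic into Publish arguments.

    Returns None for anything that is not a notification, for example a
    subscription confirmation, which the application handles separately.
    """
    envelope = json.loads(sns_post_body)
    if envelope.get("Type") != "Notification":
        return None
    alarm = json.loads(envelope["Message"])
    return {
        "TopicArn": topic_for(now),
        # SNS limits email subjects to 100 characters.
        "Subject": f"ALARM: {alarm['AlarmName']}"[:100],
        "Message": alarm.get("NewStateReason") or alarm["AlarmName"],
    }


if __name__ == "__main__":
    body = json.dumps({
        "Type": "Notification",
        "Message": json.dumps({
            "AlarmName": "HighCPU",
            "NewStateReason": "Threshold crossed",
        }),
    })
    print(build_publish_request(body, datetime.now(timezone.utc)))
```

The returned dictionary uses the parameter names of the SNS Publish operation, so an application using an AWS SDK could pass it straight through once it has verified the incoming message.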

NEW QUESTION 25

Your company wants to understand where cost is coming from in the company's production AWS account. There are a number of applications and services running at any given time. Without expending too much initial development time, how best can you give the business a good understanding of which applications cost the most per month to operate?

- A. Create an automation script which periodically creates AWS Support tickets requesting detailed intra-month information about your bill.

- B. Use custom CloudWatch Metrics in your system, and put a metric data point whenever cost is incurred.

- C. Use AWS Cost Allocation Tagging for all resources which support it. Use the Cost Explorer to analyze costs throughout the month.

- D. Use the AWS Price API and constantly running resource inventory scripts to calculate total price based on multiplication of consumed resources over time.

Answer: C

Explanation:

A tag is a label that you or AWS assigns to an AWS resource. Each tag consists of a key and a value. A key can have more than one value. You can use tags to organize your resources, and cost allocation tags to track your AWS costs on a detailed level. After you activate cost allocation tags, AWS uses the cost allocation tags to organize your resource costs on your cost allocation report, to make it easier for you to categorize and track your AWS costs. AWS provides two types of cost allocation tags, an AWS-generated tag and user-defined tags. AWS defines, creates, and applies the AWS-generated tag for you, and you define, create, and apply user-defined tags. You must activate both types of tags separately before they can appear in Cost Explorer or on a cost allocation report.

For more information on Cost Allocation tags, please visit the below URL: http://docs.aws.amazon.com/awsaccountbilling/latest/aboutv2/cost-alloctags.html
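Once a cost allocation tag is active, per-application cost is a grouping by that tag. The Python sketch below shows the idea under stated assumptions: the tag key `Application` is hypothetical, `cost_request` builds arguments in the shape of the Cost Explorer GetCostAndUsage operation without calling AWS, and the sample response is made up. The `TagKey$value` form of the group keys is an assumption about the response layout and should be checked against a real response.

```python
from collections import defaultdict
from decimal import Decimal


def cost_request(start, end, tag_key="Application"):
    """Build arguments shaped like a GetCostAndUsage call grouped by one tag."""
    return {
        "TimePeriod": {"Start": start, "End": end},  # dates as YYYY-MM-DD
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def cost_by_tag(response, metric="UnblendedCost"):
    """Sum cost per tag value across all periods, most expensive first."""
    totals = defaultdict(Decimal)
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            # Assumed key format "Application$web"; an empty value means untagged.
            _, _, value = group["Keys"][0].partition("$")
            amount = Decimal(group["Metrics"][metric]["Amount"])
            totals[value or "(untagged)"] += amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


def _group(key, amount):
    """Helper that builds one made-up response group."""
    return {"Keys": [key], "Metrics": {"UnblendedCost": {"Amount": amount, "Unit": "USD"}}}


# Made-up response covering two monthly periods.
SAMPLE_RESPONSE = {
    "ResultsByTime": [
        {"Groups": [
            _group("Application$web", "120.50"),
            _group("Application$batch", "40.25"),
            _group("Application$", "9.75"),
        ]},
        {"Groups": [_group("Application$batch", "100.00")]},
    ]
}

if __name__ == "__main__":
    for application, total in cost_by_tag(SAMPLE_RESPONSE):
        print(application, total)
```

`Decimal` is used instead of `float` so that summed currency amounts do not pick up binary rounding error.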

