Exam Code: DOP-C01 (Practice Exam Latest Test Questions VCE PDF)

Exam Name: AWS Certified DevOps Engineer - Professional

Certification Provider: Amazon-Web-Services

Online DOP-C01 free questions and answers of New Version:

NEW QUESTION 1

You are building a mobile app for consumers to post cat pictures online. You will be storing the images in AWS S3. You want to run the system very cheaply and simply. Which one of these options allows you to build a photo sharing application with the right authentication/authorization implementation?

- A. Build the application out using AWS Cognito and web identity federation to allow users to log in using Facebook or Google accounts. Once they are logged in, the secret token passed to that user is used to directly access resources on AWS, like AWS S3.

- B. Use JWT or SAML compliant systems to build authorization policies. Users log in with a username and password, and are given a token they can use indefinitely to make calls against the photo infrastructure.

- C. Use AWS API Gateway with a constantly rotating API Key to allow access from the client side. Construct a custom build of the SDK and include S3 access in it.

- D. Create an AWS oAuth Service Domain and grant public signup and access to the domain. During setup, add at least one major social media site as a trusted Identity Provider for users.

Answer: A

Explanation:

Amazon Cognito lets you easily add user sign-up and sign-in and manage permissions for your mobile and web apps. You can create your own user directory within Amazon Cognito. You can also choose to authenticate users through social identity providers such as Facebook, Twitter, or Amazon; with SAML identity solutions; or by using your own identity system. In addition, Amazon Cognito enables you to save data locally on users' devices, allowing your applications to work even when the devices are offline. You can then synchronize data across users' devices so that their app experience remains consistent regardless of the device they use.

For more information on AWS Cognito, please visit the below URL:

• http://docs.aws.amazon.com/cognito/latest/developerguide/what-is-amazon-cognito.html

NEW QUESTION 2

Your development team is using access keys to develop an application that has access to S3 and DynamoDB. A new security policy has outlined that the credentials should not be older than 2 months, and should be rotated. How can you achieve this?

- A. Use the application to rotate the keys every 2 months via the SDK.

- B. Use a script which will query the date the keys were created. If older than 2 months, delete them and create new keys.

- C. Delete the user associated with the keys every 2 months. Then recreate the user.

- D. Delete the IAM Role associated with the keys every 2 months. Then recreate the IAM Role.

Answer: B

Explanation:

One can use the CLI command list-access-keys to get the access keys. This command also returns the "CreateDate" of the keys. If the CreateDate is older than 2 months, then the keys can be deleted.

The list-access-keys CLI command returns information about the access key IDs associated with the specified IAM user. If there are none, the action returns an empty list.

For more information on the CLI command, please refer to the below link:

• http://docs.aws.amazon.com/cli/latest/reference/iam/list-access-keys.html
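The age check described above can be sketched in Python. This is only an illustration: the function name `find_stale_keys`, the 60-day approximation of "2 months", and the sample key IDs are assumptions, and the input is assumed to be shaped like the `AccessKeyMetadata` list that list-access-keys returns.

```python
from datetime import datetime, timedelta, timezone


def find_stale_keys(key_metadata, now, max_age_days=60):
    """Return the IDs of access keys whose CreateDate is older than max_age_days."""
    cutoff = now - timedelta(days=max_age_days)
    return [k["AccessKeyId"] for k in key_metadata if k["CreateDate"] < cutoff]


# Made-up sample data; real entries would come from list-access-keys.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
sample = [
    {"AccessKeyId": "AKIAEXAMPLEOLD", "CreateDate": datetime(2024, 1, 15, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAEXAMPLENEW", "CreateDate": datetime(2024, 5, 20, tzinfo=timezone.utc)},
]
stale = find_stale_keys(sample, now)
print(stale)
```

A rotation script would then create a replacement key and delete each key ID in `stale`.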

NEW QUESTION 3

Your application's Auto Scaling Group scales up too quickly, too much, and stays scaled when traffic decreases. What should you do to fix this?

- A. Set a longer cooldown period on the Group, so the system stops overshooting the target capacity. The issue is that the scaling system doesn't allow enough time for new instances to begin servicing requests before measuring aggregate load again.

- B. Calculate the bottleneck or constraint on the compute layer, then select that as the new metric, and set the metric thresholds to the bounding values that begin to affect response latency.

- C. Raise the CloudWatch Alarms threshold associated with your autoscaling group, so the scaling takes more of an increase in demand before beginning.

- D. Use larger instances instead of lots of smaller ones, so the Group stops scaling out so much and wasting resources at the OS level, since the OS uses a higher proportion of resources on smaller instances.

Answer: B

Explanation:

The most likely cause is that the wrong metric is being used to scale out and in.

Option A is not valid because a longer cooldown does not explain why the group stays scaled out when traffic decreases; that symptom means the scale-in threshold is never being reached in CloudWatch.

Option C is not valid because raising the CloudWatch alarm threshold will not ensure that the instances scale in when the traffic decreases.

Option D is not valid because the question does not mention any constraint that points to the instance size.

For an example of using custom metrics for scaling in and out, please follow the below link for a use case.

• https://blog.powerupcloud.com/aws-autoscaling-based-on-database-query-custom-metrics-f396c16e5e6a

NEW QUESTION 4

Your development team is developing a mobile application that accesses resources in AWS. The users accessing this application will be logging in via Facebook and Google. Which of the following AWS mechanisms would you use to authenticate users for the application that needs to access AWS resources?

- A. Use separate IAM users that correspond to each Facebook and Google user

- B. Use separate IAM Roles that correspond to each Facebook and Google user

- C. Use Web identity federation to authenticate the users

- D. Use AWS Policies to authenticate the users

Answer: C

Explanation:

The AWS documentation mentions the following:

You can directly configure individual identity providers to access AWS resources using web identity federation. AWS currently supports authenticating users using web identity federation through several identity providers:

- Login with Amazon

- Facebook Login

- Google Sign-in

For more information on Web identity federation please visit the below URL:

• http://docs.aws.amazon.com/sdk-for-javascript/v2/developer-guide/loading-browser-credentials-federated-id.html

NEW QUESTION 5

You've been tasked with building out a duplicate environment in another region for disaster recovery purposes. Part of your environment relies on EC2 instances with preconfigured software. What steps would you take to configure the instances in another region? Choose the correct answer from the options below

- A. Create an AMI of the EC2 instance

- B. Create an AMI of the EC2 instance and copy the AMI to the desired region

- C. Make the EC2 instance shareable among other regions through IAM permissions

- D. None of the above

Answer: B

Explanation:

You can copy an Amazon Machine Image (AMI) within or across AWS regions using the AWS Management Console, the AWS command line tools or SDKs, or the Amazon EC2 API, all of which support the CopyImage action. You can copy both Amazon EBS-backed AMIs and instance store-backed AMIs. You can copy AMIs with encrypted snapshots and encrypted AMIs.

For more information on copying AMIs, please refer to the below link:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/CopyingAMIs.html
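As a rough illustration of the CopyImage action, the sketch below wraps the `copy_image` call that a boto3 EC2 client exposes. The helper name `copy_ami_to_region`, the AMI IDs, and the `FakeEC2Client` stand-in are hypothetical; the fake is there only so the example runs without AWS credentials.

```python
def copy_ami_to_region(dest_ec2_client, source_image_id, source_region, name):
    """Ask the destination region's EC2 client to copy an AMI from source_region."""
    response = dest_ec2_client.copy_image(
        Name=name,
        SourceImageId=source_image_id,
        SourceRegion=source_region,
    )
    return response["ImageId"]


class FakeEC2Client:
    """Hypothetical stand-in for a boto3 EC2 client; records calls instead of hitting AWS."""

    def __init__(self):
        self.calls = []

    def copy_image(self, **kwargs):
        self.calls.append(kwargs)
        return {"ImageId": "ami-0copy0example"}


fake = FakeEC2Client()
new_ami = copy_ami_to_region(fake, "ami-0source0example", "us-east-1", "dr-copy")
print(new_ami)
```

In real use, the client would be created for the destination (disaster recovery) region, for example with `boto3.client("ec2", region_name="us-west-2")`, because the copy is requested from the region that receives the AMI.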

NEW QUESTION 6

Your current log analysis application takes more than four hours to generate a report of the top 10 users of your web application. You have been asked to implement a system that can report this information in real time, ensure that the report is always up to date, and handle increases in the number of requests to your web application. Choose the option that is cost-effective and can fulfill the requirements.

- A. Publish your data to CloudWatch Logs, and configure your application to autoscale to handle the load on demand.

- B. Publish your log data to an Amazon S3 bucket. Use AWS CloudFormation to create an Auto Scaling group to scale your post-processing application which is configured to pull down your log files stored in Amazon S3.

- C. Post your log data to an Amazon Kinesis data stream, and subscribe your log-processing application so that it is configured to process your logging data.

- D. Create a multi-AZ Amazon RDS MySQL cluster, post the logging data to MySQL, and run a map reduce job to retrieve the required information on user counts.

Answer: C

Explanation:

When you see Amazon Kinesis as an option, it is usually the ideal choice for processing data in real time.

Amazon Kinesis makes it easy to collect, process, and analyze real-time, streaming data so you can get timely insights and react quickly to new information. Amazon Kinesis offers key capabilities to cost effectively process streaming data at any scale, along with the flexibility to choose the tools that best suit the requirements of your application. With Amazon Kinesis, you can ingest real-time data such as application logs, website clickstreams, IoT telemetry data, and more into your databases, data lakes and data warehouses, or build your own real-time applications using this data.

For more information on Amazon Kinesis, please visit the below URL:

• https://aws.amazon.com/kinesis
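To make the "always up to date" requirement concrete, here is a minimal sketch of what a stream consumer might do with each batch of log records: update running counts and report the top users on demand. The `TopUsers` class and the `user` field are assumptions for illustration; fetching the records from a Kinesis stream is not shown.

```python
from collections import Counter


class TopUsers:
    """Keeps running request counts per user so the report never needs a full re-scan."""

    def __init__(self, n=10):
        self.n = n
        self.counts = Counter()

    def process(self, records):
        # Each record is assumed to be a dict with a "user" field.
        for record in records:
            self.counts[record["user"]] += 1

    def report(self):
        # Highest counts first, limited to n entries.
        return self.counts.most_common(self.n)


tracker = TopUsers(n=2)
tracker.process([{"user": "alice"}, {"user": "bob"}, {"user": "alice"}])
tracker.process([{"user": "alice"}, {"user": "carol"}, {"user": "bob"}])
print(tracker.report())
```

Because each batch only increments counters, the report reflects the latest data as soon as a batch is processed, instead of after a multi-hour batch job.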

NEW QUESTION 7

As part of your deployment process, you are configuring your continuous integration (CI) system to build AMIs. You want to build them in an automated manner that is also cost-efficient. Which method should you use?

- A. Attach an Amazon EBS volume to your CI instance, build the root file system of your image on the volume, and use the CreateImage API call to create an AMI out of this volume.

- B. Have the CI system launch a new instance, bootstrap the code and apps onto the instance and create an AMI out of it.

- C. Upload all contents of the image to Amazon S3, launch the base instance, download all of the contents from Amazon S3 and create the AMI.

- D. Have the CI system launch a new spot instance, bootstrap the code and apps onto the instance and create an AMI out of it.

Answer: D

Explanation:

The AWS documentation mentions the following:

If your organization uses Jenkins software in a CI/CD pipeline, you can add Automation as a post-build step to pre-install application releases into Amazon Machine Images (AMIs). You can also use the Jenkins scheduling feature to call Automation and create your own operating system (OS) patching cadence.

For more information on Automation with Jenkins, please visit the link:

• http://docs.aws.amazon.com/systems-manager/latest/userguide/automation-jenkins.html

• https://wiki.jenkins.io/display/JENKINS/Amazon+EC2+Plugin

NEW QUESTION 8

The operations team and the development team want a single place to view both operating system and application logs. How should you implement this using AWS services? Choose two from the options below

- A. Using AWS CloudFormation, create a CloudWatch Logs LogGroup and send the operating system and application logs of interest using the CloudWatch Logs Agent.

- B. Using AWS CloudFormation and configuration management, set up remote logging to send events via UDP packets to CloudTrail.

- C. Using configuration management, set up remote logging to send events to Amazon Kinesis and insert these into Amazon CloudSearch or Amazon Redshift, depending on available analytic tools.

- D. Using AWS CloudFormation, merge the application logs with the operating system logs, and use IAM Roles to allow both teams to have access to view console output from Amazon EC2.

Answer: AC

Explanation:

Option B is invalid because CloudTrail is not designed to take in UDP packets.

Option D is invalid because CloudWatch Logs is already available, so there is no need to have specific logs designed for this.

You can use Amazon CloudWatch Logs to monitor, store, and access your log files from Amazon Elastic Compute Cloud (Amazon EC2) instances, AWS CloudTrail, and other sources. You can then retrieve the associated log data from CloudWatch Logs.

For more information on CloudWatch Logs please refer to the below link:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/logs/WhatIsCloudWatchLogs.html

You can then use Kinesis to process those logs. For more information on Amazon Kinesis please refer to the below link:

• http://docs.aws.amazon.com/streams/latest/dev/introduction.html

NEW QUESTION 9

Which of the following commands for the Elastic Beanstalk CLI can be used to create an environment for the current application?

- A. eb create

- B. eb start

- C. eb env

- D. eb app

Answer: A

Explanation:

Differences from Version 3 of EB CLI

EB is a command line interface (CLI) tool for Elastic Beanstalk that you can use to deploy applications quickly and more easily. The latest version of EB was introduced by Elastic Beanstalk in EB CLI 3. Although Elastic Beanstalk still supports EB 2.6 for customers who previously installed and continue to use it, you should migrate to the latest version of EB CLI 3, as it can manage environments that you launched using EB CLI 2.6 or earlier versions of EB CLI. EB CLI automatically retrieves settings from an environment created using EB if the environment is running. Note that EB CLI 3 does not store option settings locally, as in earlier versions.

EB CLI introduces the commands eb create, eb deploy, eb open, eb console, eb scale, eb setenv, eb config, eb terminate, eb clone, eb list, eb use, eb printenv, and eb ssh. In EB CLI 3.1 or later, you can also use the eb swap command. In EB CLI 3.2 only, you can use the eb abort, eb platform, and eb upgrade commands. In addition to these new commands, EB CLI 3 commands differ from EB CLI 2.6 commands in several cases:

1. eb init - Use eb init to create an .elasticbeanstalk directory in an existing project directory and create a new Elastic Beanstalk application for the project. Unlike with previous versions, EB CLI 3 and later versions do not prompt you to create an environment.

2. eb start - EB CLI 3 does not include the command eb start. Use eb create to create an environment.

3. eb stop - EB CLI 3 does not include the command eb stop. Use eb terminate to completely terminate an environment and clean up.

4. eb push and git aws.push - EB CLI 3 does not include the commands eb push or git aws.push. Use eb deploy to update your application code.

5. eb update - EB CLI 3 does not include the command eb update. Use eb config to update an environment.

6. eb branch - EB CLI 3 does not include the command eb branch.

For more information about using EB CLI 3 commands to create and manage an application, see EB CLI Command Reference. For a command reference for EB 2.6, see EB CLI 2 Commands. For a walkthrough of how to deploy a sample application using EB CLI 3, see Managing Elastic Beanstalk environments with the EB CLI. For a walkthrough of how to deploy a sample application using eb 2.6, see Getting Started with Eb. For a walkthrough of how to use EB 2.6 to map a Git branch to a specific environment, see Deploying a Git Branch to a Specific Environment.

• https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/eb-cli.html#eb-cli2-differences

Note: Additionally, EB CLI 2.6 has been deprecated. It has been replaced by EB CLI 3:

• https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/eb-cli3.html

NEW QUESTION 10

You have an AWS OpsWorks Stack running Chef Version 11.10. Your company hosts its own proprietary cookbook on Amazon S3, and this is specified as a custom cookbook in the stack. You want to use an open-source cookbook located in an external Git repository. What tasks should you perform to enable the use of both custom cookbooks?

- A. In the AWS OpsWorks stack settings, enable Berkshelf. Create a new cookbook with a Berksfile that specifies the other two cookbooks. Configure the stack to use this new cookbook.

- B. In the OpsWorks stack settings add the open source project's cookbook details in addition to your cookbook.

- C. Contact the open source project's maintainers and request that they pull your cookbook into theirs. Update the stack to use their cookbook.

- D. In your cookbook create an S3 symlink object that points to the open source project's cookbook.

Answer: A

Explanation:

To use an external cookbook on an instance, you need a way to install it and manage any dependencies. The preferred approach is to implement a cookbook that supports a dependency manager named Berkshelf. Berkshelf works on Amazon EC2 instances, including AWS OpsWorks Stacks instances, but it is also designed to work with Test Kitchen and Vagrant.

For more information on OpsWorks and Berkshelf, please visit the link:

• http://docs.aws.amazon.com/opsworks/latest/userguide/cookbooks-101-opsworks-berkshelf.html

NEW QUESTION 11

One of your engineers has written a web application in the Go Programming language and has asked your DevOps team to deploy it to AWS. The application code is hosted on a Git repository.

What are your options? (Select Two)

- A. Create a new AWS Elastic Beanstalk application and configure a Go environment to host your application. Using Git, check out the latest version of the code. Once the local repository for Elastic Beanstalk is configured, use the "eb create" command to create an environment and then use the "eb deploy" command to deploy the application.

- B. Write a Dockerfile that installs the Go base image and uses Git to fetch your application. Create a new AWS OpsWorks stack that contains a Docker layer that uses the Dockerrun.aws.json file to deploy your container and then use the Dockerfile to automate the deployment.

- C. Write a Dockerfile that installs the Go base image and fetches your application using Git. Create a new AWS Elastic Beanstalk application and use this Dockerfile to automate the deployment.

- D. Write a Dockerfile that installs the Go base image and fetches your application using Git. Create an AWS CloudFormation template that creates and associates an AWS::EC2::Instance resource type with an AWS::EC2::Container resource type.

Answer: AC

Explanation:

OpsWorks works with Chef recipes and not with Docker containers, so Option B is invalid. There is no AWS::EC2::Container resource for CloudFormation, so Option D is invalid.

Below is the documentation on Elastic Beanstalk and Docker:

Elastic Beanstalk supports the deployment of web applications from Docker containers. With Docker containers, you can define your own runtime environment. You can choose your own platform, programming language, and any application dependencies (such as package managers or tools), that aren't supported by other platforms. Docker containers are self-contained and include all the configuration information and software your web application requires to run.

For more information on Elastic Beanstalk and Docker, please visit the links:

• http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/create_deploy_docker.html

• https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/eb-cli3-getting-started.html

• https://docs.aws.amazon.com/elasticbeanstalk/latest/dg/eb3-cli-git.html

NEW QUESTION 12

You need your CI to build AMIs with code pre-installed on the images on every new code push. You need to do this as cheaply as possible. How do you do this?

- A. Bid on spot instances just above the asking price as soon as new commits come in, perform all instance configuration and setup, then create an AMI based on the spot instance.

- B. Have the CI launch a new on-demand EC2 instance when new commits come in, perform all instance configuration and setup, then create an AMI based on the on-demand instance.

- C. Purchase a Light Utilization Reserved Instance to save money on the continuous integration machine. Use these credits whenever you create AMIs on instances.

- D. When the CI instance receives commits, attach a new EBS volume to the CI machine. Perform all setup on this EBS volume so you don't need

Answer: A

Explanation:

Amazon EC2 Spot instances allow you to bid on spare Amazon EC2 computing capacity. Since Spot instances are often available at a discount compared to On-Demand pricing, you can significantly reduce the cost of running your applications, grow your application's compute capacity and throughput for the same budget, and enable new types of cloud computing applications.

For more information on Spot Instances, please visit the below URL:

• https://aws.amazon.com/ec2/spot/

NEW QUESTION 13

You have an Auto Scaling group with 2 AZs. One AZ has 4 EC2 instances and the other has 3 EC2 instances. None of the instances are protected from scale in. Based on the default Auto Scaling termination policy what will happen?

- A. Auto Scaling selects an instance to terminate randomly

- B. Auto Scaling will terminate unprotected instances in the Availability Zone with the oldest launch configuration.

- C. Auto Scaling terminates the unprotected instances that are closest to the next billing hour.

- D. Auto Scaling will select the AZ with 4 EC2 instances and terminate an instance.

Answer: D

Explanation:

The default termination policy is designed to help ensure that your network architecture spans Availability Zones evenly. When using the default termination policy, Auto Scaling selects an instance to terminate as follows:

Auto Scaling determines whether there are instances in multiple Availability Zones. If so, it selects the Availability Zone with the most instances and at least one instance that is not protected from scale in. If there is more than one Availability Zone with this number of instances, Auto Scaling selects the Availability Zone with the instances that use the oldest launch configuration.

For more information on Auto Scaling instance termination please refer to the below link:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/as-instance-termination.html
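The first step of the default policy (choosing the Availability Zone) can be sketched as follows. This is a simplified model, not the service's implementation: the function name and instance fields are assumptions, and ties are broken alphabetically here rather than by oldest launch configuration.

```python
from collections import Counter


def pick_az_for_scale_in(instances):
    """Return the AZ with the most instances that has at least one unprotected instance."""
    counts = Counter(i["az"] for i in instances)
    eligible = {i["az"] for i in instances if not i["protected"]}
    candidates = [az for az in counts if az in eligible]
    if not candidates:
        return None  # every instance is protected from scale in
    most = max(counts[az] for az in candidates)
    # Simplification: alphabetical tie-break instead of oldest launch configuration.
    return sorted(az for az in candidates if counts[az] == most)[0]


# The scenario from the question: 4 instances in one AZ, 3 in the other, none protected.
group = (
    [{"az": "us-east-1a", "protected": False}] * 4
    + [{"az": "us-east-1b", "protected": False}] * 3
)
print(pick_az_for_scale_in(group))
```

With the 4/3 split from the question, the AZ holding four instances is selected, which matches answer D.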

NEW QUESTION 14

Which of the following CLI commands is used to spin up new EC2 Instances?

- A. aws ec2 run-instances

- B. aws ec2 create-instances

- C. aws ec2 new-instances

- D. aws ec2 launch-instances

Answer: A

Explanation:

The AWS Documentation mentions the following:

Launches the specified number of instances using an AMI for which you have permissions. You can specify a number of options, or leave the default options. The following rules apply:

[EC2-VPC] If you don't specify a subnet ID, we choose a default subnet from your default VPC for you. If you don't have a default VPC, you must specify a subnet ID in the request.

[EC2-Classic] If you don't specify an Availability Zone, we choose one for you.

Some instance types must be launched into a VPC. If you do not have a default VPC, or if you do not specify a subnet ID, the request fails. For more information, see Instance Types Available Only in a VPC.

[EC2-VPC] All instances have a network interface with a primary private IPv4 address. If you don't specify this address, we choose one from the IPv4 range of your subnet.

Not all instance types support IPv6 addresses. For more information, see Instance Types.

If you don't specify a security group ID, we use the default security group. For more information, see Security Groups.

If any of the AMIs have a product code attached for which the user has not subscribed, the request fails.

For more information on the EC2 run-instances command please refer to the below link:

• http://docs.aws.amazon.com/cli/latest/reference/ec2/run-instances.html
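The optional-parameter rules above can be illustrated with a small helper that builds the arguments for the SDK equivalent of run-instances (the `run_instances` call on a boto3 EC2 client). The helper name and the example IDs are hypothetical; the sketch only assembles the request and does not call AWS.

```python
def build_run_instances_args(image_id, instance_type, count=1, subnet_id=None,
                             security_group_ids=None):
    """Build keyword arguments for an EC2 run_instances request."""
    args = {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": count,
        "MaxCount": count,
    }
    # Omitted subnet: EC2 falls back to a default subnet of the default VPC.
    if subnet_id is not None:
        args["SubnetId"] = subnet_id
    # Omitted security groups: EC2 falls back to the default security group.
    if security_group_ids:
        args["SecurityGroupIds"] = list(security_group_ids)
    return args


minimal = build_run_instances_args("ami-0example", "t3.micro")
explicit = build_run_instances_args("ami-0example", "t3.micro", count=2,
                                    subnet_id="subnet-0example")
print(minimal)
print(explicit)
```

The resulting dictionary could be passed as `ec2_client.run_instances(**args)`; leaving out the subnet only works when the account has a default VPC, as the rules above state.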

NEW QUESTION 15

A gaming company adopted AWS CloudFormation to automate load-testing of their games. They have created an AWS CloudFormation template for each gaming environment and one for the load-testing stack. The load-testing stack creates an Amazon Relational Database Service (RDS) Postgres database and two web servers running on Amazon Elastic Compute Cloud (EC2) that send HTTP requests, measure response times, and write the results into the database. A test run usually takes between 15 and 30 minutes. Once the tests are done, the AWS CloudFormation stacks are torn down immediately. The test results written to the Amazon RDS database must remain accessible for visualization and analysis.

Select possible solutions that allow access to the test results after the AWS CloudFormation load-testing stack is deleted.

Choose 2 answers.

- A. Define an Amazon RDS Read-Replica in the load-testing AWS CloudFormation stack and define a dependency relation between master and replica via the DependsOn attribute.

- B. Define an update policy to prevent deletion of the Amazon RDS database after the AWS CloudFormation stack is deleted.

- C. Define a deletion policy of type Retain for the Amazon RDS resource to assure that the RDS database is not deleted with the AWS CloudFormation stack.

- D. Define a deletion policy of type Snapshot for the Amazon RDS resource to assure that the RDS database can be restored after the AWS CloudFormation stack is deleted.

- E. Define automated backups with a backup retention period of 30 days for the Amazon RDS database and perform point-in-time recovery of the database after the AWS CloudFormation stack is deleted.

Answer: CD

Explanation:

With the DeletionPolicy attribute you can preserve or (in some cases) back up a resource when its stack is deleted. You specify a DeletionPolicy attribute for each resource that you want to control. If a resource has no DeletionPolicy attribute, AWS CloudFormation deletes the resource by default.

To keep a resource when its stack is deleted, specify Retain for that resource. You can use Retain for any resource. For example, you can retain a nested stack, S3 bucket, or EC2 instance so that you can continue to use or modify those resources after you delete their stacks.

For more information on DeletionPolicy, please visit the below URL:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-attribute-deletionpolicy.html
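As an illustration of where the attribute goes, the sketch below builds a minimal template fragment in Python and prints it as JSON. The logical name `LoadTestDB`, the parameter names, and the property values are assumptions chosen for the example; note that DeletionPolicy sits beside `Type` and `Properties`, not inside `Properties`.

```python
import json


def load_test_db_resource(deletion_policy):
    """Return an RDS resource definition carrying the given DeletionPolicy."""
    if deletion_policy not in ("Delete", "Retain", "Snapshot"):
        raise ValueError("unsupported DeletionPolicy in this sketch: " + deletion_policy)
    return {
        "Type": "AWS::RDS::DBInstance",
        "DeletionPolicy": deletion_policy,  # resource-level attribute, not a property
        "Properties": {
            "Engine": "postgres",
            "DBInstanceClass": "db.t3.micro",
            "AllocatedStorage": "20",
            "MasterUsername": {"Ref": "DBUser"},
            "MasterUserPassword": {"Ref": "DBPassword"},
        },
    }


template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "DBUser": {"Type": "String"},
        "DBPassword": {"Type": "String", "NoEcho": True},
    },
    "Resources": {"LoadTestDB": load_test_db_resource("Snapshot")},
}
print(json.dumps(template, indent=2))
```

Passing `"Retain"` instead of `"Snapshot"` would leave the database instance running after the stack is deleted, which corresponds to option C rather than option D.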

NEW QUESTION 16

Your company has an on-premises Active Directory setup in place. The company has extended its footprint on AWS, but still wants to be able to use the on-premises Active Directory for authentication. Which of the following AWS services can be used to ensure that AWS resources such as AWS Workspaces can continue to use the existing credentials stored in the on-premises Active Directory?

- A. Use the Active Directory service on AWS

- B. Use the AWS Simple AD service

- C. Use the Active Directory connector service on AWS

- D. Use the ClassicLink feature on AWS

Answer: C

Explanation:

The AWS Documentation mentions the following:

AD Connector is a directory gateway with which you can redirect directory requests to your on-premises Microsoft Active Directory without caching any information in the cloud. AD Connector comes in two sizes, small and large. A small AD Connector is designed for smaller organizations of up to 500 users. A large AD Connector can support larger organizations of up to 5,000 users.

For more information on the AD connector, please refer to the below URL:

• http://docs.aws.amazon.com/directoryservice/latest/admin-guide/directory_ad_connector.html

NEW QUESTION 17

Your company is getting ready to do a major public announcement of a social media site on AWS. The website is running on EC2 instances deployed across multiple Availability Zones with a Multi-AZ RDS MySQL Extra Large DB Instance. The site performs a high number of small reads and writes per second and relies on an eventual consistency model. After comprehensive tests you discover that there is read contention on RDS MySQL. Which are the best approaches to meet these requirements? Choose 2 answers from the options below

- A. Deploy ElastiCache in-memory cache running in each availability zone

- B. Implement sharding to distribute load to multiple RDS MySQL instances

- C. Increase the RDS MySQL Instance size and implement provisioned IOPS

- D. Add an RDS MySQL read replica in each availability zone

Answer: AD

Explanation:

Implement Read Replicas and ElastiCache.

Amazon RDS Read Replicas provide enhanced performance and durability for database (DB) instances. This replication feature makes it easy to elastically scale out beyond the capacity constraints of a single DB Instance for read-heavy database workloads. You can create one or more replicas of a given source DB Instance and serve high-volume application read traffic from multiple copies of your data, thereby increasing aggregate read throughput.

For more information on Read Replicas, please visit the below link

• https://aws.amazon.com/rds/details/read-replicas/

Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store or cache in the cloud. The service improves the performance of web applications by allowing you to retrieve information from fast, managed, in-memory data stores, instead of relying entirely on slower disk-based databases.

For more information on Amazon ElastiCache, please visit the below link

• https://aws.amazon.com/elasticache/

NEW QUESTION 18

You are using Jenkins as your continuous integration system for the application hosted in AWS. The builds are then placed on newly launched EC2 Instances. You want to ensure that the overall cost of the entire continuous integration and deployment pipeline is minimized. Which of the below options would meet these requirements? Choose 2 answers from the options given below

- A. Ensure that all build tests are conducted using Jenkins before deploying the build to newly launched EC2 Instances.

- B. Ensure that all build tests are conducted on the newly launched EC2 Instances.

- C. Ensure the Instances are launched only when the build tests are completed.

- D. Ensure the Instances are created beforehand for faster turnaround time for the application builds to be placed.

Answer: AC

Explanation:

To ensure low cost, one can carry out the build tests on the Jenkins server itself. Once the build tests are completed, the build can then be transferred onto newly launched EC2 Instances.

For more information on AWS and Jenkins, please visit the below URL:

• https://aws.amazon.com/getting-started/projects/setup-jenkins-build-server/

Option D is incorrect. It would be the right choice if the requirement were faster turnaround rather than lower cost.

NEW QUESTION 19

You need to deploy an AWS stack in a repeatable manner across multiple environments. You have selected CloudFormation as the right tool to accomplish this, but have found that there is a resource type you need to create and model, but is unsupported by CloudFormation. How should you overcome this challenge?

- A. Use a CloudFormation Custom Resource Template by selecting an API call to proxy for create, update, and delete actions. CloudFormation will use the AWS SDK, CLI, or API method of your choosing as the state transition function for the resource type you are modeling.

- B. Submit a ticket to the AWS Forums. AWS extends CloudFormation Resource Types by releasing tooling to the AWS Labs organization on GitHub. Their response time is usually 1 day, and they complete requests within a week or two.

- C. Instead of depending on CloudFormation, use Chef, Puppet, or Ansible to author Heat templates, which are declarative stack resource definitions that operate over the OpenStack hypervisor and cloud environment.

- D. Create a CloudFormation Custom Resource Type by implementing create, update, and delete functionality, either by subscribing a Custom Resource Provider to an SNS topic, or by implementing the logic in AWS Lambda.

Answer: D

Explanation:

Custom resources enable you to write custom provisioning logic in templates that AWS CloudFormation runs anytime you create, update (if you changed the custom resource), or delete stacks. For example, you might want to include resources that aren't available as AWS CloudFormation resource types. You can include those resources by using custom resources. That way you can still manage all your related resources in a single stack.

Use the AWS::CloudFormation::CustomResource or Custom::String resource type to define custom resources in your templates. Custom resources require one property: the service token, which specifies where AWS CloudFormation sends requests to, such as an Amazon SNS topic.

For more information on Custom Resources in CloudFormation, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/template-custom-resources.html
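As a rough illustration of the create, update, and delete handling described above, here is a minimal Python sketch of a Lambda-backed custom resource provider. The function names, the placeholder physical ID, and the returned data are assumptions made for illustration. The response fields (`Status`, `PhysicalResourceId`, `StackId`, `RequestId`, `LogicalResourceId`, `Data`) follow the custom resource response format, and the actual provisioning logic is left as a placeholder.

```python
import json
import urllib.request


def build_response(event, status, physical_id, data=None, reason=""):
    """Build the JSON body that CloudFormation expects back from a custom resource."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason,
        "PhysicalResourceId": physical_id,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def handle(event):
    """Dispatch on RequestType; the provisioning itself is only a placeholder."""
    request_type = event["RequestType"]  # "Create", "Update", or "Delete"
    # Reuse the existing ID on Update/Delete; the default ID is hypothetical.
    physical_id = event.get("PhysicalResourceId", "example-resource-1")
    if request_type in ("Create", "Update"):
        data = {"Message": f"{request_type} handled"}
    elif request_type == "Delete":
        data = {}
    else:
        return build_response(event, "FAILED", physical_id, reason="Unknown RequestType")
    return build_response(event, "SUCCESS", physical_id, data)


def send_response(event, body):
    """PUT the response to the pre-signed URL that CloudFormation supplies."""
    payload = json.dumps(body).encode("utf-8")
    request = urllib.request.Request(event["ResponseURL"], data=payload, method="PUT")
    request.add_header("Content-Type", "")
    with urllib.request.urlopen(request) as reply:
        return reply.status


def lambda_handler(event, context):
    """Entry point when the service token points at a Lambda function."""
    return send_response(event, handle(event))
```

In a template, the resource would reference this function through its service token. CloudFormation waits for the response before it moves the resource to a completed or failed state.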

NEW QUESTION 20

You are a DevOps engineer for your company. You have been instructed to deploy Docker containers using the OpsWorks service. How could you achieve this? Choose 2 answers from the options given below.

- A. Use custom cookbooks for your OpsWorks stack and provide the Git repository which has the Chef recipes for the Docker containers.

- B. Use Elastic Beanstalk to deploy Docker containers since this is not possible in OpsWorks. Then attach the Elastic Beanstalk environment as a layer in OpsWorks.

- C. Use CloudFormation to deploy Docker containers since this is not possible in OpsWorks. Then attach the CloudFormation resources as a layer in OpsWorks.

- D. In the App for OpsWorks deployment, specify the Git URL for the recipes which will deploy the applications in the Docker environment.

Answer: AD

Explanation:

This is mentioned in the AWS documentation:

AWS OpsWorks lets you deploy and manage applications of all shapes and sizes. OpsWorks layers let you create blueprints for EC2 instances to install and configure any software that you want.

For more information on OpsWorks and Docker, please refer to the below link:

• https://aws.amazon.com/blogs/devops/running-docker-on-aws-opsworks/

NEW QUESTION 21

When you create an encrypted EBS volume and attach it to a supported instance type, which of the following types of data are encrypted?

Choose 3 answers from the options below

- A. Data at rest inside the volume

- B. All data copied from the EBS volume to S3

- C. All data moving between the volume and the instance

- D. All snapshots created from the volume

Answer: ACD

Explanation:

This is clearly stated in the AWS documentation on Amazon EBS encryption:

Amazon EBS encryption offers a simple encryption solution for your EBS volumes without the need to build, maintain, and secure your own key management infrastructure. When you create an encrypted EBS volume and attach it to a supported instance type, the following types of data are encrypted:

• Data at rest inside the volume

• All data moving between the volume and the instance

• All snapshots created from the volume

• All volumes created from those snapshots

For more information on EBS encryption, please refer to the below URL: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSEncryption.html
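For a sense of how an encrypted volume is requested in practice, here is a small Python sketch that builds the parameters for a volume-creation call. The helper name, the Availability Zone, the size, and the `gp3` volume type are assumptions chosen for illustration. The parameter names match those accepted by boto3's EC2 `create_volume`, but the sketch only builds the dictionary and does not call AWS.

```python
def encrypted_volume_params(availability_zone, size_gib, kms_key_id=None):
    """Return keyword arguments for an encrypted EBS volume request."""
    params = {
        "AvailabilityZone": availability_zone,
        "Size": size_gib,          # size in GiB
        "VolumeType": "gp3",       # assumed volume type for this sketch
        "Encrypted": True,         # turns on EBS encryption for the volume
    }
    # Without an explicit key, the account's default key for EBS encryption is used.
    if kms_key_id is not None:
        params["KmsKeyId"] = kms_key_id
    return params


params = encrypted_volume_params("us-east-1a", 100)
print(params)
```

With boto3 installed and credentials configured, the dictionary could be passed as `ec2.create_volume(**params)`. Snapshots taken from such a volume, and volumes restored from those snapshots, are then encrypted as listed above.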

NEW QUESTION 22

You have written a CloudFormation template that creates 1 elastic load balancer fronting 2 EC2 instances. Which section of the template should you edit so that the DNS of the load balancer is returned upon creation of the stack?

- A. Resources

- B. Parameters

- C. Outputs

- D. Mappings

Answer: C

Explanation:

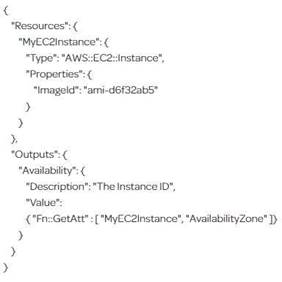

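The example above shows a simple CloudFormation template. It creates an EC2 instance based on the AMI ami-d6f32ab5. When the instance is created, the template outputs the AZ in which it was created. The Outputs section declares the values that are returned once the stack is created, so it is also where the DNS name of the load balancer would be exposed.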

To understand more on Cloud Formation, please visit the URL:

• https://aws.amazon.com/cloudformation/
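To make the Outputs section concrete for the load balancer case in the question, here is a minimal sketch that builds a template as a Python dictionary and prints it as JSON. The logical names `WebLoadBalancer` and `LoadBalancerDNS` are assumptions, and the two EC2 instances are omitted for brevity. `DNSName` is an attribute that `Fn::GetAtt` can return for an `AWS::ElasticLoadBalancing::LoadBalancer` resource.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Resources": {
        # Classic load balancer; the EC2 instances behind it are left out here.
        "WebLoadBalancer": {
            "Type": "AWS::ElasticLoadBalancing::LoadBalancer",
            "Properties": {
                "AvailabilityZones": {"Fn::GetAZs": ""},
                "Listeners": [
                    {"LoadBalancerPort": "80", "InstancePort": "80", "Protocol": "HTTP"}
                ],
            },
        }
    },
    "Outputs": {
        # Returned once stack creation completes.
        "LoadBalancerDNS": {
            "Description": "DNS name of the load balancer",
            "Value": {"Fn::GetAtt": ["WebLoadBalancer", "DNSName"]},
        }
    },
}

print(json.dumps(template, indent=2))
```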

NEW QUESTION 23

You are in charge of designing a CloudFormation template which deploys a LAMP stack. After deploying a stack, you see that the status of the stack is showing as CREATE_COMPLETE, but the Apache server is still not up and running and is experiencing issues while starting up. You want to ensure that the stack creation only shows the status of CREATE_COMPLETE after all resources defined in the stack are up and running. How can you achieve this?

Choose 2 answers from the options given below.

- A. Define a stack policy which defines that all underlying resources should be up and running before showing a status of CREATE_COMPLETE.

- B. Use lifecycle hooks to mark the completion of the creation and configuration of the underlying resource.

- C. Use the CreationPolicy to ensure it is associated with the EC2 Instance resource.

- D. Use the CFN helper scripts to signal once the resource configuration is complete.

Answer: CD

Explanation:

The AWS documentation mentions:

When you provision an Amazon EC2 instance in an AWS CloudFormation stack, you might specify additional actions to configure the instance, such as install software packages or bootstrap applications. Normally, CloudFormation proceeds with stack creation after the instance has been successfully created. However, you can use a CreationPolicy so that CloudFormation proceeds with stack creation only after your configuration actions are done. That way you'll know your applications are ready to go after stack creation succeeds.

For more information on the CreationPolicy, please visit the below URL: https://aws.amazon.com/blogs/devops/use-a-creationpolicy-to-wait-for-on-instance-configurations/
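The combination of a CreationPolicy and the CFN helper scripts can be sketched as follows. This is a simplified illustration rather than a full LAMP template: the logical name `WebServer`, the instance type, the 15-minute timeout, and the `httpd` install commands are assumptions, and the script assumes an Amazon Linux image where the helper scripts live under `/opt/aws/bin`. The template is built as a Python dictionary and printed as JSON.

```python
import json

# Bootstrap script: install and start Apache, then report the exit code of the
# last command to CloudFormation with cfn-signal.
user_data_script = "\n".join([
    "#!/bin/bash",
    "yum install -y httpd",
    "systemctl start httpd",
    "/opt/aws/bin/cfn-signal -e $? --stack ${AWS::StackName} "
    "--resource WebServer --region ${AWS::Region}",
])

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {"ImageId": {"Type": "AWS::EC2::Image::Id"}},
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            # Creation waits for one success signal, for at most 15 minutes.
            "CreationPolicy": {
                "ResourceSignal": {"Count": 1, "Timeout": "PT15M"}
            },
            "Properties": {
                "ImageId": {"Ref": "ImageId"},
                "InstanceType": "t3.micro",
                "UserData": {"Fn::Base64": {"Fn::Sub": user_data_script}},
            },
        }
    },
}

print(json.dumps(template, indent=2))
```

If Apache fails to start, `cfn-signal` reports a non-zero exit code and the resource fails. If no signal arrives before the timeout, the resource also fails. Either way the stack does not reach CREATE_COMPLETE.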

NEW QUESTION 24

You are designing a system which needs, at a minimum, 8 m4.large instances operating to service traffic. When designing a system for high availability in the us-east-1 region, which has 6 Availability Zones, your company needs to be able to handle the death of a full availability zone. How should you distribute the servers, to save as much cost as possible, assuming all of the EC2 nodes are properly linked to an ELB? Your VPC account can utilize us-east-1's AZ's a through f, inclusive.

- A. 3 servers in each of AZ's a through d, inclusive.

- B. 8 servers in each of AZ's a and b.

- C. 2 servers in each of AZ's a through e, inclusive.

- D. 4 servers in each of AZ's a through c, inclusive.

Answer: C

Explanation:

The best approach is to distribute the instances across multiple AZ's so that the loss of any single AZ does not drop capacity below the minimum. With Option C (10 instances in total), 8 servers remain even if one AZ goes down. Options A, B, and D also keep at least 8 servers running after an AZ failure, but they need 12, 16, and 12 instances respectively, so Option C is the cheapest. For more information on high availability and fault tolerance, please refer to the below link:

https://media.amazonwebservices.com/architecturecenter/AWS_ac_ra_ftha_04.pdf
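The arithmetic behind the answer can be checked with a short Python sketch. It takes each option as (servers per AZ, number of AZ's), computes the total instance count and the count left after losing one full AZ, and picks the cheapest option that still meets the minimum of 8. The variable and function names are illustrative.

```python
REQUIRED = 8  # minimum instances that must survive the loss of one AZ

# option -> (servers per AZ, number of AZ's used)
options = {
    "A": (3, 4),
    "B": (8, 2),
    "C": (2, 5),
    "D": (4, 3),
}


def evaluate(per_az, az_count):
    """Return (total instances, instances left after one AZ fails)."""
    total = per_az * az_count
    surviving = per_az * (az_count - 1)
    return total, surviving


results = {name: evaluate(*layout) for name, layout in options.items()}

# Keep only the layouts that still meet the minimum, then take the smallest total.
valid = {name: total for name, (total, surviving) in results.items()
         if surviving >= REQUIRED}
cheapest = min(valid, key=valid.get)

for name, (total, surviving) in results.items():
    print(f"Option {name}: {total} instances, {surviving} after losing one AZ")
print(f"Cheapest valid option: {cheapest}")
```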

NEW QUESTION 25

You need to deploy a new application version to production. Because the deployment is high-risk, you need to roll the new version out to users over a number of hours, to make sure everything is working correctly. You need to be able to control the proportion of users seeing the new version of the application down to the percentage point. You use ELB and EC2 with Auto Scaling Groups and custom AMIs with your code pre-installed assigned to Launch Configurations. There are no database-level changes during your deployment. You have been told you cannot spend too much money, so you must not increase the number of EC2 instances much at all during the deployment, but you also need to be able to switch back to the original version of code quickly if something goes wrong. What is the best way to meet these requirements?

- A. Create a second ELB, Auto Scaling Launch Configuration, and Auto Scaling Group using the Launch Configuration. Create AMIs with all code pre-installed. Assign the new AMI to the second Auto Scaling Launch Configuration. Use Route53 Weighted Round Robin Records to adjust the proportion of traffic hitting the two ELBs.

- B. Use the Blue-Green deployment method to enable the fastest possible rollback if needed. Create a full second stack of instances and cut the DNS over to the new stack of instances, and change the DNS back if a rollback is needed.

- C. Create AMIs with all code pre-installed. Assign the new AMI to the Auto Scaling Launch Configuration, to replace the old one. Gradually terminate instances running the old code (launched with the old Launch Configuration) and allow the new AMIs to boot to adjust the traffic balance to the new code. On rollback, reverse the process by doing the same thing, but changing the AMI on the Launch Config back to the original code.

- D. Migrate to use AWS Elastic Beanstalk. Use the established and well-tested Rolling Deployment setting AWS provides on the new Application Environment, publishing a zip bundle of the new code and adjusting the wait period to spread the deployment over time. Re-deploy the old code bundle to rollback if needed.

Answer: A

Explanation:

This is an example of a Blue Green Deployment

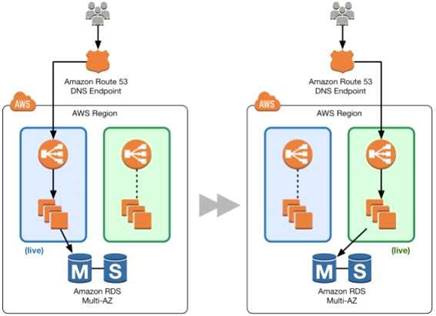

You can shift traffic all at once or you can do a weighted distribution. With Amazon Route 53, you can define a percentage of traffic to go to the green environment and gradually update the weights until the green environment carries the full production traffic. A weighted distribution provides the ability to perform canary analysis where a small percentage of production traffic is introduced to a new environment. You can test the new code and monitor for errors, limiting the blast radius if any issues are encountered. It also allows the green environment to scale out to support the full production load if you're using Elastic Load Balancing.

For more information on Blue Green Deployments, please visit the below URL:

• https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf
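The weighted distribution can be sketched in Python as a function that builds a Route 53 change batch for two weighted records. Route 53 sends each record a share of traffic equal to its weight divided by the sum of all weights, so weights that add up to 100 map directly to percentage points. The function name, the record name, and the ELB DNS names below are hypothetical, and CNAME records with a 60-second TTL are an assumption. The sketch only builds the dictionary and does not call AWS.

```python
def weighted_change_batch(record_name, blue_elb_dns, green_elb_dns, green_percent):
    """Build a change batch sending green_percent of traffic to the green ELB."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")

    def record(set_id, target, weight):
        return {
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "CNAME",
                "SetIdentifier": set_id,   # distinguishes the weighted records
                "Weight": weight,          # share = weight / sum of weights
                "TTL": 60,
                "ResourceRecords": [{"Value": target}],
            },
        }

    return {
        "Changes": [
            record("blue", blue_elb_dns, 100 - green_percent),
            record("green", green_elb_dns, green_percent),
        ]
    }


# Start the canary with 5% of traffic on the new (green) environment.
batch = weighted_change_batch(
    "app.example.com", "blue-elb.example.com", "green-elb.example.com", 5
)
print([change["ResourceRecordSet"]["Weight"] for change in batch["Changes"]])
```

With boto3, a dictionary of this shape could be passed as the `ChangeBatch` argument of the Route 53 `change_resource_record_sets` call. Rolling back amounts to submitting the batch again with `green_percent` set to 0.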
