We provide accurate Amazon-Web-Services DOP-C01 exam prep, the best resource for clearing the DOP-C01 test and getting certified as an Amazon-Web-Services AWS Certified DevOps Engineer - Professional. The DOP-C01 Questions & Answers cover all the knowledge points of the real DOP-C01 exam. Crack your Amazon-Web-Services DOP-C01 exam with the latest dumps, guaranteed!

Free DOP-C01 Demo Online For Amazon-Web-Services Certification:

NEW QUESTION 1

You need to deploy a Node.js application and do not have any experience with AWS. Which deployment method will be the simplest for you to deploy?

- A. AWS Elastic Beanstalk

- B. AWS CloudFormation

- C. AWS EC2

- D. AWS OpsWorks

Answer: A

Explanation:

With Elastic Beanstalk, you can quickly deploy and manage applications in the AWS Cloud without worrying about the infrastructure that runs those applications.

AWS Elastic Beanstalk reduces management complexity without restricting choice or control. You simply upload your application, and Elastic Beanstalk automatically handles the details of capacity provisioning, load balancing, scaling, and application health monitoring.

For more information on Elastic Beanstalk, please refer to the below link:

• http://docs.aws.amazon.com/elasticbeanstalk/latest/dg/Welcome.html

NEW QUESTION 2

Which of the following can be configured as targets for CloudWatch Events? Choose 3 answers from the options given below.

- A. Amazon EC2 Instances

- B. AWS Lambda Functions

- C. AWS CodeCommit

- D. Amazon ECS Tasks

Answer: ABD

Explanation:

The AWS Documentation mentions the below

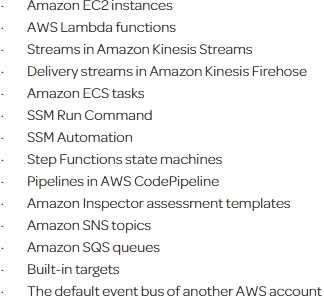

You can configure the following AWS services as targets for CloudWatch Events.

For more information on CloudWatch Events, please see the below link:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/events/WhatIsCloudWatchEvents.html
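A rule pairs an event pattern with one or more targets. The Python sketch below shows that relationship under a few stated assumptions. The rule name and Lambda ARN are hypothetical. The dictionaries only mirror the shape of the arguments that boto3's `events` client takes for `put_rule` and `put_targets`, and nothing is sent to AWS. `matches` is a deliberately simplified matcher that handles exact-value lists only, not the full event-pattern syntax.

```python
import json

# Match EC2 instance state changes; "source" and "detail-type" are fields of
# the CloudWatch Events event envelope.
EVENT_PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped", "terminated"]},
}

# Hypothetical rule and target, shaped like put_rule / put_targets arguments.
RULE_PARAMS = {
    "Name": "ec2-state-change-rule",  # hypothetical rule name
    "EventPattern": json.dumps(EVENT_PATTERN),
}
TARGETS_PARAMS = {
    "Rule": "ec2-state-change-rule",
    "Targets": [{
        "Id": "notify-lambda",
        # Hypothetical Lambda function ARN.
        "Arn": "arn:aws:lambda:us-east-1:123456789012:function:notify",
    }],
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must exist in the event, nested
    dicts are matched recursively, and leaf values must be in the allowed list."""
    for key, allowed in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(allowed, dict):
            if not isinstance(actual, dict) or not matches(allowed, actual):
                return False
        elif actual not in allowed:
            return False
    return True


sample_event = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}
print(matches(EVENT_PATTERN, sample_event))  # True
```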

NEW QUESTION 3

You have a set of EC2 Instances running behind an ELB. These EC2 Instances are launched via an Auto Scaling group. There is a requirement to ensure that the logs from the servers are stored in a durable storage layer, so that staff can analyze the log data in the future. Which of the following steps can be implemented to fulfill this requirement? Choose 2 answers from the options given below.

- A. On the web servers, create a scheduled task that executes a script to rotate and transmit the logs to an Amazon S3 bucket.

- B. Use AWS Data Pipeline to move log data from the Amazon S3 bucket to Amazon Redshift in order to process and run reports.

- C. On the web servers, create a scheduled task that executes a script to rotate and transmit the logs to Amazon Glacier.

- D. Use AWS Data Pipeline to move log data from the Amazon S3 bucket to Amazon SQS in order to process and run reports.

Answer: AB

Explanation:

Amazon S3 is the perfect option for durable storage. The AWS Documentation mentions the following on S3 storage: Amazon Simple Storage Service (Amazon S3) makes it simple and practical to collect, store, and analyze data, regardless of format, all at massive scale. S3 is object storage built to store and retrieve any amount of data from anywhere: web sites and mobile apps, corporate applications, and data from IoT sensors or devices.

For more information on Amazon S3, please refer to the below URL:

• https://aws.amazon.com/s3/

Amazon Redshift is a fast, fully managed data warehouse that makes it simple and cost-effective to analyze all your data using standard SQL and your existing Business Intelligence (BI) tools. It allows you to run complex analytic queries against petabytes of structured data, using sophisticated query optimization, columnar storage on high-performance local disks, and massively parallel query execution. Most results come back in seconds. For more information on Amazon Redshift, please refer to the below URL:

• https://aws.amazon.com/redshift/

NEW QUESTION 4

Your social media marketing application has a component written in Ruby running on AWS Elastic Beanstalk. This application component posts messages to social media sites in support of various marketing campaigns. Your management now requires you to record replies to these social media messages to analyze the effectiveness of the marketing campaign in comparison to past and future efforts. You've already developed a new application component to interface with the social media site APIs in order to read the replies. Which process should you use to record the social media replies in a durable data store that can be accessed at any time for analytics of historical data?

- A. Deploy the new application component in an Auto Scaling group of Amazon EC2 instances, read the data from the social media sites, store it with Amazon Elastic Block Store, and use AWS Data Pipeline to publish it to Amazon Kinesis for analytics.

- B. Deploy the new application component as an Elastic Beanstalk application, read the data from the social media sites, store it in DynamoDB, and use Apache Hive with Amazon Elastic MapReduce for analytics.

- C. Deploy the new application component in an Auto Scaling group of Amazon EC2 instances, read the data from the social media sites, store it in Amazon Glacier, and use AWS Data Pipeline to publish it to Amazon Redshift for analytics.

- D. Deploy the new application component as an Amazon Elastic Beanstalk application, read the data from the social media site, store it with Amazon Elastic Block Store, and use Amazon Kinesis to stream the data to Amazon CloudWatch for analytics.

Answer: B

Explanation:

The AWS Documentation mentions the below

Amazon DynamoDB is a fast and flexible NoSQL database service for all applications that need consistent, single-digit millisecond latency at any scale. It is a fully managed cloud database and supports both document and key-value store models. Its flexible data model, reliable performance, and automatic scaling of throughput capacity make it a great fit for mobile, web, gaming, ad tech, IoT, and many other applications.

For more information on AWS DynamoDB please see the below link:

• https://aws.amazon.com/dynamodb/

NEW QUESTION 5

You have an ELB on AWS with a set of web servers behind it. There is a requirement that the SSL key used to encrypt data is always kept secure. Secondly, the ELB logs should only be decryptable by a subset of users. Which of these architectures meets all of the requirements?

- A. Use Elastic Load Balancing to distribute traffic to a set of web servers. To protect the SSL private key, upload the key to the load balancer and configure the load balancer to offload the SSL traffic. Write your web server logs to an ephemeral volume that has been encrypted using a randomly generated AES key.

- B. Use Elastic Load Balancing to distribute traffic to a set of web servers. Use TCP load balancing on the load balancer and configure your web servers to retrieve the private key from a private Amazon S3 bucket on boot. Write your web server logs to a private Amazon S3 bucket using Amazon S3 server-side encryption.

- C. Use Elastic Load Balancing to distribute traffic to a set of web servers, configure the load balancer to perform TCP load balancing, use an AWS CloudHSM to perform the SSL transactions, and write your web server logs to a private Amazon S3 bucket using Amazon S3 server-side encryption.

- D. Use Elastic Load Balancing to distribute traffic to a set of web servers. Configure the load balancer to perform TCP load balancing, use an AWS CloudHSM to perform the SSL transactions, and write your web server logs to an ephemeral volume that has been encrypted using a randomly generated AES key.

Answer: C

Explanation:

The AWS CloudHSM service helps you meet corporate, contractual and regulatory compliance requirements for data security by using dedicated Hardware Security Module (HSM) appliances within the AWS cloud. With CloudHSM, you control the encryption keys and cryptographic operations performed by the HSM.

Option D also uses CloudHSM, but it is wrong because it writes the logs to an ephemeral volume, which is only temporary storage.

For more information on CloudHSM, please refer to the link:

• https://aws.amazon.com/cloudhsm/

NEW QUESTION 6

You have a web application hosted on EC2 instances. The web application is updated on a quarterly basis. Which of the following are examples of blue/green deployments that can be applied to the application? Choose 2 answers from the options given below.

- A. Deploy the application to an Elastic Beanstalk environment. Have a secondary Elastic Beanstalk environment in place with the updated application code. Use the swap URLs feature to switch onto the new environment.

- B. Place the EC2 instances behind an ELB. Have a secondary environment with EC2 instances and ELB in another region. Use Route53 with geo-location to route requests and switch over to the secondary environment.

- C. Deploy the application using OpsWorks stacks. Have a secondary stack for the new application deployment. Use Route53 to switch over to the new stack for the new application update.

- D. Deploy the application to an Elastic Beanstalk environment. Use the Rolling updates feature to perform a Blue Green deployment.

Answer: AC

Explanation:

The AWS Documentation mentions the following

AWS Elastic Beanstalk is a fast and simple way to get an application up and running on AWS. It's perfect for developers who want to deploy code without worrying about managing the underlying infrastructure. Elastic Beanstalk supports Auto Scaling and Elastic Load Balancing, both of which enable blue/green deployment.

Elastic Beanstalk makes it easy to run multiple versions of your application and provides capabilities to swap the environment URLs, facilitating blue/green deployment.

AWS OpsWorks is a configuration management service based on Chef that allows customers to deploy and manage application stacks on AWS. Customers can specify resource and application configuration, and deploy and monitor running resources. OpsWorks simplifies cloning entire stacks when you're preparing blue/green environments.

For more information on blue/green deployments, please refer to the below link:

• https://d0.awsstatic.com/whitepapers/AWS_Blue_Green_Deployments.pdf

NEW QUESTION 7

There is a requirement to monitor API calls made against your AWS account by different users and entities, and to keep a history of those calls. The history is needed in bulk for later review. Which 2 services can be used in this scenario?

- A. AWS Config; AWS Inspector

- B. AWS CloudTrail; AWS Config

- C. AWS CloudTrail; CloudWatch Events

- D. AWS Config; AWS Lambda

Answer: C

Explanation:

You can use AWS CloudTrail to get a history of AWS API calls and related events for your account. This history includes calls made with the AWS Management Console, AWS Command Line Interface, AWS SDKs, and other AWS services. For more information on CloudTrail, please visit the below URL:

• http://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-user-guide.html

Amazon CloudWatch Events delivers a near real-time stream of system events that describe changes in Amazon Web Services (AWS) resources. Using simple rules that you can quickly set up, you can match events and route them to one or more target functions or streams. CloudWatch Events becomes aware of operational changes as they occur. CloudWatch Events responds to these operational changes and takes corrective action as necessary, by sending messages to respond to the environment, activating functions, making changes, and capturing state information. For more information on CloudWatch Events, please visit the below URL:

• http://docs.aws.amazon.com/AmazonCloudWatch/latest/events/WhatIsCloudWatchEvents.html
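CloudTrail writes its history as JSON log files. In each file, a top-level `Records` array holds one entry per API call, with fields such as `eventTime`, `eventSource`, `eventName`, and `userIdentity`. The Python snippet below is a minimal sketch of the bulk-review side. It assumes a log file has already been downloaded from S3 and decompressed, and it counts calls per caller. The sample records are made up for illustration.

```python
import json
from collections import Counter

# Hand-written sample in the shape of a CloudTrail log file (values are made up).
SAMPLE_LOG = json.dumps({
    "Records": [
        {"eventTime": "2023-01-01T10:00:00Z", "eventSource": "ec2.amazonaws.com",
         "eventName": "RunInstances",
         "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"}},
        {"eventTime": "2023-01-01T10:05:00Z", "eventSource": "s3.amazonaws.com",
         "eventName": "CreateBucket",
         "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"}},
        {"eventTime": "2023-01-01T11:00:00Z", "eventSource": "ec2.amazonaws.com",
         "eventName": "TerminateInstances",
         "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/bob"}},
    ]
})


def caller_of(record: dict) -> str:
    """Prefer the caller's ARN; some identities (e.g. AWS services) have no ARN."""
    identity = record.get("userIdentity", {})
    return identity.get("arn", identity.get("type", "unknown"))


def calls_per_caller(log_text: str) -> Counter:
    """Count API calls per caller in one (already decompressed) log file."""
    records = json.loads(log_text).get("Records", [])
    return Counter(caller_of(r) for r in records)


for caller, count in calls_per_caller(SAMPLE_LOG).most_common():
    print(caller, count)
```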

NEW QUESTION 8

Your company is planning on using the available services in AWS to completely automate their integration, build and deployment process. They are planning on using AWS CodeBuild to build their artifacts. When using CodeBuild, which of the following files specifies a collection of build commands that can be used by the service during the build process?

- A. appspec.yml

- B. buildspec.yml

- C. buildspec.xml

- D. appspec.json

Answer: B

Explanation:

The AWS documentation mentions the following

AWS CodeBuild supports building from several source code repository providers. The source code must contain a build specification (build spec) file, or the build spec must be declared as part of a build project definition. A buildspec is a collection of build commands and related settings, in YAML format, that AWS CodeBuild uses to run a build.

For more information on AWS CodeBuild, please refer to the below link: http://docs.aws.amazon.com/codebuild/latest/userguide/planning.html
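For reference, a buildspec declares a `version`, a set of `phases` (such as `install` and `build`) that each hold a list of `commands`, and optionally the `artifacts` to keep. The Python sketch below keeps the example in one language. It models the buildspec as a dict and renders it to YAML with a tiny, hypothetical `to_yaml` helper, then runs a minimal `validate` check. The npm commands and the `dist` directory are assumptions about a Node.js project. In practice you would simply commit a `buildspec.yml` file at the root of the source.

```python
import json

# A buildspec modelled as a dict; the npm commands and "dist" are assumptions.
BUILDSPEC = {
    "version": 0.2,
    "phases": {
        "install": {"commands": ["npm ci"]},
        "build": {"commands": ["npm test", "npm run build"]},
    },
    "artifacts": {"files": ["**/*"], "base-directory": "dist"},
}


def to_yaml(node: dict, indent: int = 0) -> list:
    """Render nested dicts / lists of scalars as YAML lines. Scalars go through
    json.dumps, so strings are double-quoted. That is valid YAML, and it is safe
    for values such as "**/*" that YAML would otherwise read as an alias."""
    pad = "  " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 1))
        elif isinstance(value, list):
            lines.append(f"{pad}{key}:")
            lines.extend(f"{pad}  - {json.dumps(item)}" for item in value)
        else:
            lines.append(f"{pad}{key}: {json.dumps(value)}")
    return lines


def validate(spec: dict) -> list:
    """Return a list of problems. This sketch requires a version, at least one
    phase, and a commands list in every phase."""
    problems = []
    if "version" not in spec:
        problems.append("missing version")
    phases = spec.get("phases", {})
    if not phases:
        problems.append("no phases defined")
    for name, phase in phases.items():
        if not isinstance(phase.get("commands"), list):
            problems.append(f"phase {name!r} has no commands list")
    return problems


print("\n".join(to_yaml(BUILDSPEC)))
print(validate(BUILDSPEC))  # []
```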

NEW QUESTION 9

You have an OpsWorks stack defined with Linux instances. You have executed a recipe, but the execution has failed. What is one way to diagnose why the recipe did not execute correctly?

- A. Use AWS CloudTrail and check the OpsWorks logs to diagnose the error

- B. Use AWS Config and check the OpsWorks logs to diagnose the error

- C. Log in to the instance and check if the recipe was properly configured.

- D. Deregister the instance and check the EC2 logs

Answer: C

Explanation:

The AWS Documentation mentions the following

If a recipe fails, the instance will end up in the setup_failed state instead of online. Even though the instance is not online as far as AWS OpsWorks Stacks is concerned, the EC2 instance is running and it's often useful to log in to troubleshoot the issue. For example, you can check whether an application or custom cookbook is correctly installed. The AWS OpsWorks Stacks built-in support for SSH and RDP login is available only for instances in the online state.

For more information on OpsWorks troubleshooting, please visit the below URL: http://docs.aws.amazon.com/opsworks/latest/userguide/troubleshoot-debug-login.html

NEW QUESTION 10

You have an application hosted in AWS, which sits on EC2 Instances behind an Elastic Load Balancer. You have added a new feature to your application and are now receiving complaints from users that the site responds slowly. Which of the below actions can you carry out to help you pinpoint the issue?

- A. Use CloudTrail to log all the API calls, and then traverse the log files to locate the issue

- B. Use CloudWatch, monitor the CPU utilization to see the times when the CPU peaked

- C. Review the Elastic Load Balancer logs

- D. Create some custom CloudWatch metrics which are pertinent to the key features of your application

Answer: D

Explanation:

Since the issue started after the new feature was added, it is likely related to the new feature.

Enabling CloudTrail will only record the API calls of all services and will not help find the cause.

Monitoring CPU utilization will only confirm that there is an issue; it will not help pinpoint it.

The Elastic Load Balancer logs will also only confirm that there is an issue; they will not help pinpoint it.

For more information on custom CloudWatch metrics, please refer to the below link: http://docs.aws.amazon.com/AmazonCloudWatch/latest/monitoring/publishingMetrics.html
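As a rough illustration of option D, the sketch below times a hypothetical new feature handler. For each call, it buffers one data point in the shape used by CloudWatch's PutMetricData: a `Namespace`, plus `MetricName`, `Dimensions`, `Value`, and `Unit` per datum. The namespace, metric name, and feature name are assumptions. A real application would pass the returned dict to boto3's `cloudwatch.put_metric_data(**params)` instead of just printing it.

```python
import time
from functools import wraps

NAMESPACE = "MyApp/Features"  # hypothetical custom namespace
pending_metric_data = []      # buffered datums, emptied by flush_params()


def timed_feature(feature_name):
    """Decorator: record each call's duration as a FeatureLatency datum."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000.0
                pending_metric_data.append({
                    "MetricName": "FeatureLatency",
                    "Dimensions": [{"Name": "Feature", "Value": feature_name}],
                    "Value": elapsed_ms,
                    "Unit": "Milliseconds",
                })
        return wrapper
    return decorator


def flush_params():
    """Return (and clear) the buffered datums as put_metric_data keyword arguments."""
    params = {"Namespace": NAMESPACE, "MetricData": list(pending_metric_data)}
    pending_metric_data.clear()
    return params


@timed_feature("new-recommendations")  # hypothetical new feature
def recommend(user_id):
    time.sleep(0.01)  # stand-in for the feature's real work
    return [f"item-for-{user_id}"]


recommend("u1")
params = flush_params()
print(params["MetricData"][0]["MetricName"], params["MetricData"][0]["Dimensions"])
```

Putting a `Feature` dimension on the metric lets you graph the new feature's latency separately from the rest of the site. That separation is the point of the answer.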

NEW QUESTION 11

Your team wants to begin practicing continuous delivery using CloudFormation, to enable automated builds and deploys of whole, versioned stacks or stack layers. You have a 3-tier, mission-critical system. Which of the following is NOT a best practice for using CloudFormation in a continuous delivery environment?

- A. Use the AWS CloudFormation ValidateTemplate call before publishing changes to AWS.

- B. Model your stack in one template, so you can leverage CloudFormation's state management and dependency resolution to propagate all changes.

- C. Use CloudFormation to create brand new infrastructure for all stateless resources on each push, and run integration tests on that set of infrastructure.

- D. Parametrize the template and use Mappings to ensure your template works in multiple Regions.

Answer: B

Explanation:


Some of the best practices for CloudFormation are:

• Create nested stacks

As your infrastructure grows, common patterns can emerge in which you declare the same components in each of your templates. You can separate out these common components and create dedicated templates for them. That way, you can mix and match different templates but use nested stacks to create a single, unified stack. Nested stacks are stacks that create other stacks. To create nested stacks, use the AWS::CloudFormation::Stack resource in your template to reference other templates.

• Reuse templates

After you have your stacks and resources set up, you can reuse your templates to replicate your infrastructure in multiple environments. For example, you can create environments for development, testing, and production so that you can test changes before implementing them into production. To make templates reusable, use the parameters, mappings, and conditions sections so that you can customize your stacks when you create them. For example, for your development environments, you can specify a lower-cost instance type compared to your production environment, but all other configurations and settings remain the same. For more information on CloudFormation best practices, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/best-practices.html
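Here is a minimal template sketch, built as a Python dict, that illustrates both practices. Common networking pieces are pulled in through an `AWS::CloudFormation::Stack` resource. `Parameters` and `Mappings` with `Fn::FindInMap` let the same template pick a per-Region AMI and a per-environment instance type. The template URL, the AMI IDs, and the `SubnetId` output of the nested template are hypothetical. The helper `find_in_map_names` is a small local sanity check that every referenced mapping exists. It is not a substitute for the ValidateTemplate call.

```python
import json

TEMPLATE = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "EnvType": {"Type": "String", "AllowedValues": ["dev", "prod"], "Default": "dev"},
    },
    "Mappings": {
        # Hypothetical AMI IDs; look up real ones for each Region you deploy to.
        "RegionMap": {
            "us-east-1": {"AMI": "ami-0123456789abcdef0"},
            "eu-west-1": {"AMI": "ami-0fedcba9876543210"},
        },
        # Cheaper instances for dev, larger ones for prod.
        "EnvMap": {
            "dev": {"InstanceType": "t3.micro"},
            "prod": {"InstanceType": "m5.large"},
        },
    },
    "Resources": {
        # Common network components live in their own template (a nested stack).
        "NetworkStack": {
            "Type": "AWS::CloudFormation::Stack",
            "Properties": {
                "TemplateURL": "https://example-bucket.s3.amazonaws.com/network.json",  # hypothetical
                "Parameters": {"EnvType": {"Ref": "EnvType"}},
            },
        },
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "ImageId": {"Fn::FindInMap": ["RegionMap", {"Ref": "AWS::Region"}, "AMI"]},
                "InstanceType": {"Fn::FindInMap": ["EnvMap", {"Ref": "EnvType"}, "InstanceType"]},
                # Assumes the nested template declares an output named SubnetId.
                "SubnetId": {"Fn::GetAtt": ["NetworkStack", "Outputs.SubnetId"]},
            },
        },
    },
}


def find_in_map_names(node):
    """Yield the map name of every Fn::FindInMap call in a template fragment."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "Fn::FindInMap":
                yield value[0]
            else:
                yield from find_in_map_names(value)
    elif isinstance(node, list):
        for item in node:
            yield from find_in_map_names(item)


missing = set(find_in_map_names(TEMPLATE["Resources"])) - set(TEMPLATE["Mappings"])
print("undefined mappings:", missing or "none")
print(json.dumps(TEMPLATE, indent=2))
```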

NEW QUESTION 12

You currently have an application with an Auto Scaling group and an Elastic Load Balancer configured in AWS. After deployment, users are complaining of slow response times for your application. Which of the following can be used as a starting point to diagnose the issue?

- A. Use CloudWatch to monitor the HealthyHostCount metric

- B. Use CloudWatch to monitor the ELB latency

- C. Use CloudWatch to monitor the CPU Utilization

- D. Use CloudWatch to monitor the Memory Utilization

Answer: B

Explanation:

High latency on the ELB side can be caused by several factors, such as:

• Network connectivity

• ELB configuration

• Backend web application server issues

For more information on ELB latency, please refer to the below link:

• https://aws.amazon.com/premiumsupport/knowledge-center/elb-latency-troubleshooting/

NEW QUESTION 13

Your company is getting ready to do a major public announcement of a social media site on AWS. The website is running on EC2 instances deployed across multiple Availability Zones with a Multi-AZ RDS MySQL Extra Large DB Instance. The site performs a high number of small reads and writes per second and relies on an eventual consistency model. After comprehensive tests you discover that there is read contention on RDS MySQL. Which are the best approaches to meet these requirements? Choose 2 answers from the options below

- A. Deploy ElastiCache in-memory cache running in each Availability Zone

- B. Implement sharding to distribute load to multiple RDS MySQL instances

- C. Increase the RDS MySQL instance size and implement provisioned IOPS

- D. Add an RDS MySQL read replica in each Availability Zone

Answer: AD

Explanation:

Implement Read Replicas and ElastiCache.

Amazon RDS Read Replicas provide enhanced performance and durability for database (DB) instances. This replication feature makes it easy to elastically scale out beyond the capacity constraints of a single DB Instance for read-heavy database workloads. You can create one or more replicas of a given source DB Instance and serve high-volume application read traffic from multiple copies of your data, thereby increasing aggregate read throughput.

For more information on Read Replicas, please visit the below link:

• https://aws.amazon.com/rds/details/read-replicas/

Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store or cache in the cloud. The service improves the performance of web applications by allowing you to retrieve information from fast, managed, in-memory data stores, instead of relying entirely on slower disk-based databases.

For more information on Amazon ElastiCache, please visit the below link:

• https://aws.amazon.com/elasticache/
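The two answers combine naturally in a cache-aside read path. The application checks the cache first, and on a miss it reads from a replica rather than the primary. The Python sketch below models this with in-memory stand-ins. `FakeDB` and the dict-based cache are hypothetical substitutes for RDS read replicas and ElastiCache, and the TTL value is an assumption. Writes, which would still go to the primary, are not shown.

```python
import itertools
import time


class FakeDB:
    """Stand-in for one MySQL endpoint (here, a read replica)."""

    def __init__(self, name, data):
        self.name = name
        self.data = data  # shared dict simulates rows already replicated
        self.reads = 0

    def get(self, key):
        self.reads += 1
        return self.data.get(key)


class CacheAsideReader:
    """Cache-aside reads with a TTL; misses go to the replicas round-robin."""

    def __init__(self, replicas, ttl_seconds=60.0, clock=time.monotonic):
        self._replicas = itertools.cycle(replicas)
        self._cache = {}  # key -> (value, expires_at); stands in for ElastiCache
        self._ttl = ttl_seconds
        self._clock = clock

    def get(self, key):
        now = self._clock()
        hit = self._cache.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]  # cache hit: no database read at all
        value = next(self._replicas).get(key)  # cache miss: read a replica
        self._cache[key] = (value, now + self._ttl)
        return value


rows = {"post:1": "hello world"}
replicas = [FakeDB("replica-az-a", rows), FakeDB("replica-az-b", rows)]
reader = CacheAsideReader(replicas, ttl_seconds=30)
for _ in range(5):
    reader.get("post:1")
print([r.reads for r in replicas])  # [1, 0]: four of the five reads hit the cache
```

Because the site already relies on eventual consistency, serving slightly stale data from the cache or a lagging replica is acceptable here.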

NEW QUESTION 14

You have an Autoscaling Group configured to launch EC2 Instances for your application. However, you notice that the Autoscaling Group is not launching instances in the right proportion; in fact, instances are being launched too fast. What can you do to mitigate this issue? Choose 2 answers from the options given below.

- A. Adjust the cooldown period set for the Autoscaling Group

- B. Set a custom metric which monitors a key application functionality for the scale-in and scale-out process.

- C. Adjust the CPU threshold set for the Autoscaling scale-in and scale-out process.

- D. Adjust the Memory threshold set for the Autoscaling scale-in and scale-out process.

Answer: AB

Explanation:

The Auto Scaling cooldown period is a configurable setting for your Auto Scaling group that helps to ensure that Auto Scaling doesn't launch or terminate additional instances before the previous scaling activity takes effect.

For more information on the cooldown period, please refer to the below link:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/Cooldown.html

It is also better to monitor the application based on a key feature and trigger scale-in and scale-out accordingly. The question does not mention CPU or memory as the cause of the issue.
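A toy model makes the effect of the cooldown visible. In this simplified sketch, each alarm breach asks for a scale-out, and a request is honored only if the cooldown has elapsed since the last scaling activity. The model ignores warm-up times and the differences between policy types. The alarm times and the 300-second value are assumptions chosen for illustration.

```python
def accepted_scale_outs(request_times, cooldown_seconds):
    """Return the request times (in seconds) that start a scaling activity.

    Simplified model: once an activity starts, further requests are ignored
    until `cooldown_seconds` have passed.
    """
    accepted = []
    last_activity = None
    for t in sorted(request_times):
        if last_activity is None or t - last_activity >= cooldown_seconds:
            accepted.append(t)
            last_activity = t
    return accepted


# Hypothetical alarm breach times while new instances are still warming up.
alarm_times = [0, 60, 120, 180, 240, 300, 360, 420, 600]
print(len(accepted_scale_outs(alarm_times, cooldown_seconds=0)))  # 9
print(accepted_scale_outs(alarm_times, cooldown_seconds=300))     # [0, 300, 600]
```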

NEW QUESTION 15

Your development team wants account-level access to production instances in order to do live debugging of a highly secure environment. Which of the following should you do?

- A. Place the credentials provided by Amazon Elastic Compute Cloud (EC2) into a secure Amazon Simple Storage Service (S3) bucket with encryption enabled. Assign AWS Identity and Access Management (IAM) users to each developer so they can download the credentials file.

- B. Place an internally created private key into a secure S3 bucket with server-side encryption using customer keys and configuration management, create a service account on all the instances using this private key, and assign IAM users to each developer so they can download the file.

- C. Place each developer's own public key into a private S3 bucket, use instance profiles and configuration management to create a user account for each developer on all instances, and place the user's public keys into the appropriate account.

- D. Place the credentials provided by Amazon EC2 onto an MFA encrypted USB drive, and physically share it with each developer so that the private key never leaves the office.

Answer: C

Explanation:

An instance profile is a container for an IAM role that you can use to pass role information to an EC2 instance when the instance starts.

A private S3 bucket can be created for each developer; the keys can be stored in the bucket and then assigned to the instance profile.

Options A and D are invalid because the credentials should not be provided by an AWS EC2 instance. Option B is invalid because you would not create a service account; instead, you should create an instance profile.

For more information on instance profiles, please refer to the below document link from AWS:

• http://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use_switch-role-ec2_instance-profiles.html

NEW QUESTION 16

You recently encountered a major bug in your web application during a deployment cycle. During this failed deployment, it took the team four hours to roll back to a previously working state, which left customers with a poor user experience. During the post-mortem, your team discussed the need to provide a quicker, more robust way to roll back failed deployments. You currently run your web application on Amazon EC2 and use Elastic Load Balancing for your load balancing needs.

Which technique should you use to solve this problem?

- A. Create deployable versioned bundles of your application. Store the bundle on Amazon S3. Re-deploy your web application on Elastic Beanstalk and enable the Elastic Beanstalk auto-rollback feature tied to CloudWatch metrics that define failure.

- B. Use an AWS OpsWorks stack to re-deploy your web application and use AWS OpsWorks DeploymentCommand to initiate a rollback during failures.

- C. Create deployable versioned bundles of your application. Store the bundle on Amazon S3. Use an AWS OpsWorks stack to redeploy your web application and use AWS OpsWorks application versioning to initiate a rollback during failures.

- D. Using Elastic Beanstalk, redeploy your web application and use the Elastic Beanstalk API to trigger a FailedDeployment API call to initiate a rollback to the previous version.

Answer: B

Explanation:

The AWS Documentation mentions the following

The AWS OpsWorks DeploymentCommand has a rollback option. The following commands are available for apps to use:

deploy: Deploy App.

Ruby on Rails apps have an optional args parameter named migrate. Set Args to {"migrate":["true"]} to migrate the database.

The default setting is {"migrate":["false"]}.

rollback: Roll the app back to the previous version.

When you update an app, AWS OpsWorks stores the previous versions, up to a maximum of five versions.

You can use this command to roll an app back as many as four versions. Reference link:

• http://docs.aws.amazon.com/opsworks/latest/APIReference/API_DeploymentCommand.html
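Here is a minimal Python sketch of the two pieces described above. `deployment_params` builds arguments in the shape of the OpsWorks CreateDeployment request: `StackId`, `AppId`, and a `Command` with a `Name` and optional `Args`. `AppHistory` is a toy model of the stored versions. It keeps at most five, so at most four rollbacks are possible. The stack and app IDs are hypothetical, and nothing here calls AWS.

```python
from collections import deque

MAX_STORED_VERSIONS = 5  # OpsWorks keeps up to five versions, per the text above


def deployment_params(stack_id, app_id, command_name, args=None):
    """Arguments shaped like an OpsWorks CreateDeployment request."""
    command = {"Name": command_name}
    if args:
        command["Args"] = args
    return {"StackId": stack_id, "AppId": app_id, "Command": command}


class AppHistory:
    """Toy model of stored app versions; the newest one is currently deployed."""

    def __init__(self):
        self.versions = deque(maxlen=MAX_STORED_VERSIONS)

    def deploy(self, version):
        self.versions.append(version)  # the oldest version drops off beyond five

    def rollback(self):
        if len(self.versions) < 2:
            raise RuntimeError("no earlier version stored")
        self.versions.pop()  # discard the current (failed) version
        return self.versions[-1]


history = AppHistory()
for v in ["v1", "v2", "v3", "v4", "v5", "v6"]:
    history.deploy(v)
print(history.rollback())  # v5
print(deployment_params("stack-123", "app-456", "deploy", {"migrate": ["true"]}))
print(deployment_params("stack-123", "app-456", "rollback"))
```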

NEW QUESTION 17

You are designing a CloudFormation stack which involves the creation of a web server and a database server. You need to ensure that the web server in the stack gets created after the database server is created. How can you achieve this?

- A. Ensure that the database server is defined first and before the web server in the CloudFormation template. The stack creation normally goes in order to create the resources.

- B. Ensure that the database server is defined as a child of the web server in the CloudFormation template.

- C. Ensure that the web server is defined as a child of the database server in the CloudFormation template.

- D. Use the DependsOn attribute to ensure that the database server is created before the web server.

Answer: D

Explanation:

The AWS Documentation mentions

With the DependsOn attribute you can specify that the creation of a specific resource follows another. When you add a DependsOn attribute to a resource, that resource is created only after the creation of the resource specified in the DependsOn attribute.

For more information on the DependsOn attribute, please visit the below URL: http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-attribute-dependson.html
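The effect of `DependsOn` can be sketched as a dependency graph. The Python snippet below declares the two servers and derives a creation order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The AMI ID and instance types are hypothetical. This is only a model. CloudFormation also infers dependencies from `Ref` and `Fn::GetAtt`, which the sketch ignores, and it creates independent resources in parallel.

```python
from graphlib import TopologicalSorter  # Python 3.9+

TEMPLATE = {
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "DependsOn": "DBServer",  # created only after DBServer is complete
            "Properties": {"ImageId": "ami-0123456789abcdef0", "InstanceType": "t3.micro"},
        },
        "DBServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {"ImageId": "ami-0123456789abcdef0", "InstanceType": "t3.small"},
        },
    }
}


def creation_order(template):
    """Order resources so each one comes after everything in its DependsOn.
    DependsOn may be a single name or a list of names."""
    graph = {}
    for name, resource in template["Resources"].items():
        deps = resource.get("DependsOn", [])
        graph[name] = {deps} if isinstance(deps, str) else set(deps)
    return list(TopologicalSorter(graph).static_order())


print(creation_order(TEMPLATE))  # ['DBServer', 'WebServer']
```

Note that the web server is declared first in the template, yet it still comes second. The order of declaration does not matter; `DependsOn` does.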

NEW QUESTION 18

Your company has a set of EC2 Instances that access data objects stored in an S3 bucket. Your IT Security department is concerned about the security of this architecture and wants you to implement the following:

1) Ensure that the EC2 Instance securely accesses the data objects stored in the S3 bucket

2) Ensure that the integrity of the objects stored in S3 is maintained.

Which of the following would help fulfill the requirements of the IT Security department? Choose 2 answers from the options given below.

- A. Create an IAM user and ensure the EC2 Instances use the IAM user credentials to access the data in the bucket.

- B. Create an IAM Role and ensure the EC2 Instances use the IAM Role to access the data in the bucket.

- C. Use S3 Cross Region replication to replicate the objects so that the integrity of data is maintained.

- D. Use an S3 bucket policy that ensures that MFA Delete is set on the objects in the bucket

Answer: BD

Explanation:

The AWS Documentation mentions the following

IAM roles are designed so that your applications can securely make API requests from your instances, without requiring you to manage the security credentials that the applications use. Instead of creating and distributing your AWS credentials, you can delegate permission to make API requests using IAM roles.

For more information on IAM roles, please refer to the below link:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html

MFA Delete can be used to add another layer of security to S3 objects and prevent their accidental deletion. For more information on MFA Delete, please refer to the below link:

• https://aws.amazon.com/blogs/security/securing-access-to-aws-using-mfa-part-3/

NEW QUESTION 19

Your system automatically provisions EIPs to EC2 instances in a VPC on boot. The system provisions the whole VPC and stack at once, with two EIPs per VPC. On your new AWS account, your attempt to create a Development environment failed after successfully creating Staging and Production environments in the same region. What happened?

- A. You didn't choose the Development version of the AMI you are using.

- B. You didn't set the Development flag to true when deploying EC2 instances.

- C. You hit the soft limit of 5 EIPs per region and requested a 6th.

- D. You hit the soft limit of 2 VPCs per region and requested a 3rd.

Answer: C

Explanation:

The most likely cause is that you have hit the maximum of 5 Elastic IPs per region.

By default, all AWS accounts are limited to 5 Elastic IP addresses per region, because public (IPv4) Internet addresses are a scarce public resource. We strongly encourage you to use an Elastic IP address primarily for the ability to remap the address to another instance in the case of instance failure, and to use DNS hostnames for all other inter-node communication.

Option A is invalid because an AMI does not have a Development version tag. Option B is invalid because there is no such flag for an EC2 instance.

Option D is invalid because the limit is 5 VPCs per region. For more information on Elastic IPs, please visit the below URL:

• http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/elastic-ip-addresses-eip.html
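The arithmetic behind the answer is simple. Two environments use 2 + 2 = 4 addresses, and the third needs two more, which takes the total past the limit of 5. The small Python sketch below finds the first environment that cannot be provisioned. It assumes the default limit of 5 and two EIPs per environment, as stated in the question.

```python
EIP_LIMIT_PER_REGION = 5   # default soft limit quoted above
EIPS_PER_ENVIRONMENT = 2   # two EIPs per VPC/stack, per the question


def first_failing_environment(environments, limit=EIP_LIMIT_PER_REGION,
                              per_env=EIPS_PER_ENVIRONMENT):
    """Return (environment that exceeds the limit, EIPs already in use before it)."""
    in_use = 0
    for env in environments:
        if in_use + per_env > limit:
            return env, in_use
        in_use += per_env
    return None, in_use


print(first_failing_environment(["Staging", "Production", "Development"]))
# ('Development', 4): its first EIP is the 5th and fits; the 6th does not
```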

NEW QUESTION 20

You have the following application to be set up in AWS:

1) A web tier hosted on EC2 Instances

2) Session data to be written to DynamoDB

3) Log files to be written to Microsoft SQL Server

How can you allow an application to write data to a DynamoDB table?

- A. Add an IAM user to a running EC2 instance.

- B. Add an IAM user that allows write access to the DynamoDB table.

- C. Create an IAM role that allows read access to the DynamoDB table.

- D. Create an IAM role that allows write access to the DynamoDB table.

Answer: D

Explanation:

IAM roles are designed so that your applications can securely make API requests from your instances, without requiring you to manage the security credentials that the applications use. Instead of creating and distributing your AWS credentials, you can delegate permission to make API requests using IAM roles. For more information on IAM roles, please refer to the below link:

http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/iam-roles-for-amazon-ec2.html
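For option D, the role needs two policy documents. A trust policy lets EC2 assume the role; the role reaches the instance through an instance profile. A permissions policy grants the DynamoDB write actions. The Python sketch below builds both as dicts. The table ARN, the account ID, and the exact action list are assumptions. `allows` is a simplified, hypothetical checker that ignores wildcards, conditions, and explicit denies.

```python
import json

# Trust policy: lets the EC2 service assume the role on the instance's behalf.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ec2.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
}

# Permissions policy: write access to one table (hypothetical table ARN).
WRITE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Action": ["dynamodb:PutItem", "dynamodb:UpdateItem",
                   "dynamodb:DeleteItem", "dynamodb:BatchWriteItem"],
        "Resource": "arn:aws:dynamodb:us-east-1:123456789012:table/SessionData",
    }],
}


def allows(policy, action):
    """Simplified check: is `action` listed verbatim in an Allow statement?"""
    for statement in policy["Statement"]:
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if statement["Effect"] == "Allow" and action in actions:
            return True
    return False


print(json.dumps(TRUST_POLICY, indent=2))
print(json.dumps(WRITE_POLICY, indent=2))
```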

NEW QUESTION 21

You have deployed an application to AWS which makes use of Autoscaling to launch new instances. You now want to change the instance type for the new instances. Which of the following is one of the action items to achieve this deployment?

- A. Use Elastic Beanstalk to deploy the new application with the new instance type

- B. Use Cloudformation to deploy the new application with the new instance type

- C. Create a new launch configuration with the new instance type

- D. Create new EC2 instances with the new instance type and attach it to the Autoscaling Group

Answer: C

Explanation:

The ideal way is to create a new launch configuration, attach it to the existing Auto Scaling group, and terminate the running instances.

Option A is invalid because the application is already running in an Auto Scaling group; redeploying it with Elastic Beanstalk is unnecessary just to change the instance type.

Option B is invalid because this adds maintenance overhead: you only need to change the Auto Scaling group, so there is no need to create a whole CloudFormation template for this.

Option D is invalid because the Auto Scaling group will still launch EC2 instances with the older launch configuration.

For more information on Auto Scaling launch configurations, please refer to the below link from AWS:

http://docs.aws.amazon.com/autoscaling/latest/userguide/LaunchConfiguration.html
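Because a launch configuration cannot be edited after it is created, the change amounts to "copy the old settings, swap the instance type, point the group at the copy". The sketch below only builds the keyword arguments; with boto3 they would be passed to the `autoscaling` client's `create_launch_configuration` and `update_auto_scaling_group` methods. Which settings to carry over (`CARRIED_KEYS`) and all names in the example are hypothetical assumptions, not a complete migration.

```python
# Settings carried over from the old launch configuration. This subset is a
# hypothetical choice for illustration; review every setting in practice.
CARRIED_KEYS = ("ImageId", "KeyName", "SecurityGroups", "IamInstanceProfile")


def build_new_launch_config(old: dict, new_name: str, new_instance_type: str) -> dict:
    """Keyword arguments for CreateLaunchConfiguration.

    Launch configurations are immutable, so the new one copies selected
    settings from the old one and replaces only the instance type.
    """
    params = {key: old[key] for key in CARRIED_KEYS if key in old}
    params["LaunchConfigurationName"] = new_name
    params["InstanceType"] = new_instance_type
    return params


def build_group_update(group_name: str, new_config_name: str) -> dict:
    """Keyword arguments for UpdateAutoScalingGroup, switching the group to the new configuration."""
    return {
        "AutoScalingGroupName": group_name,
        "LaunchConfigurationName": new_config_name,
    }


if __name__ == "__main__":
    # Hypothetical description of the current launch configuration.
    old_config = {
        "LaunchConfigurationName": "web-lc-v1",
        "ImageId": "ami-0123456789abcdef0",
        "InstanceType": "t3.small",
        "KeyName": "web-key",
        "SecurityGroups": ["sg-0123456789abcdef0"],
    }
    print(build_new_launch_config(old_config, "web-lc-v2", "m5.large"))
    print(build_group_update("web-asg", "web-lc-v2"))
```

Instances that are already running keep the old instance type; only instances launched after the group update use the new configuration, which is why the explanation also terminates the running instances.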

NEW QUESTION 22

As an architect, you have decided to use CloudFormation instead of OpsWorks or Elastic Beanstalk for deploying the applications in your company. Unfortunately, you have discovered that there is a resource type that is not supported by CloudFormation. What can you do to get around this?

- A. Specify more mappings and separate your template into multiple templates by using nested stacks.

- B. Create a custom resource type using template developer, custom resource template, and CloudFormation.

- C. Specify the custom resource by separating your template into multiple templates by using nested stacks.

- D. Use a configuration management tool such as Chef, Puppet, or Ansible.

Answer: B

Explanation:

Custom resources enable you to write custom provisioning logic in templates that AWS CloudFormation runs anytime you create, update (if you changed the custom resource), or delete stacks. For example, you might want to include resources that aren't available as AWS CloudFormation resource types. You can include those resources by using custom resources. That way you can still manage all your related resources in a single stack.

For more information on custom resources in Cloudformation please visit the below URL:

• http://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/template-custom-resources.html
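A common way to back a custom resource is a Lambda function. CloudFormation invokes it with a `RequestType` of `Create`, `Update` or `Delete` and waits until the function PUTs a JSON result to the pre-signed `ResponseURL` in the event. The sketch below shows that request/response cycle; the provisioning steps are placeholders, the fallback physical ID is hypothetical, and the `sender` parameter is an addition for testing, not part of the Lambda interface.

```python
import json
import urllib.request
from typing import Callable, Optional


def build_response(event: dict, status: str, physical_id: str,
                   data: Optional[dict] = None, reason: str = "") -> dict:
    """Body that CloudFormation expects at the pre-signed ResponseURL."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason or "See the function logs for details",
        "PhysicalResourceId": physical_id,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def send_response(event: dict, body: dict) -> int:
    """PUT the response body to the pre-signed URL supplied by CloudFormation."""
    payload = json.dumps(body).encode("utf-8")
    request = urllib.request.Request(
        event["ResponseURL"], data=payload, method="PUT",
        headers={"Content-Type": ""},  # empty content type, as AWS's cfn-response helper sends
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def handler(event: dict, context: object,
            sender: Callable[[dict, dict], object] = send_response) -> dict:
    """Lambda entry point for a custom resource."""
    request_type = event["RequestType"]  # "Create", "Update" or "Delete"
    # CloudFormation supplies PhysicalResourceId only on Update and Delete.
    physical_id = event.get("PhysicalResourceId", "hypothetical-resource-id")
    try:
        if request_type == "Create":
            pass  # placeholder: call the API that provisions the unsupported resource
        elif request_type == "Update":
            pass  # placeholder: apply the changed ResourceProperties
        elif request_type == "Delete":
            pass  # placeholder: remove the resource so the stack can be deleted
        body = build_response(event, "SUCCESS", physical_id,
                              {"Message": f"{request_type} handled"})
    except Exception as exc:  # report failure instead of leaving the stack waiting
        body = build_response(event, "FAILED", physical_id, reason=str(exc))
    sender(event, body)
    return body
```

Always sending a response, even on failure, matters: if the function never answers, the stack operation hangs until CloudFormation times out.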

NEW QUESTION 23

You are a DevOps Engineer for your company. You are in charge of an application that uses EC2, ELB and Autoscaling. You have been asked to retrieve the ELB access logs. When you try to access the logs, you see that nothing has been recorded in S3. Why is this the case?

- A. You don't have the necessary access to the logs generated by ELB.

- B. By default ELB access logs are disabled.

- C. The Autoscaling service is not sending the required logs to ELB

- D. The EC2 Instances are not sending the required logs to ELB

Answer: B

Explanation:

The AWS Documentation mentions the following:

Access logging is an optional feature of Elastic Load Balancing that is disabled by default. After you enable access logging for your load balancer, Elastic Load Balancing captures the logs and stores them in the Amazon S3 bucket that you specify. You can disable access logging at any time.

For more information on ELB access logs, please see the below link:

• http://docs.aws.amazon.com/elasticloadbalancing/latest/classic/access-log-collection.html
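To turn logging on for a Classic Load Balancer, you set its `AccessLog` attribute. The sketch below builds that attribute value; with boto3 it would be passed as `LoadBalancerAttributes` to the `elb` client's `modify_load_balancer_attributes` method together with `LoadBalancerName`. The bucket and prefix names are hypothetical, and the bucket also needs a bucket policy that lets Elastic Load Balancing write to it.

```python
# Classic Load Balancers publish access logs every 5 or 60 minutes.
VALID_EMIT_INTERVALS = (5, 60)


def access_log_attributes(bucket: str, prefix: str = "", emit_interval: int = 60) -> dict:
    """LoadBalancerAttributes value that enables access logging for a Classic Load Balancer."""
    if emit_interval not in VALID_EMIT_INTERVALS:
        raise ValueError(
            f"EmitInterval must be one of {VALID_EMIT_INTERVALS}, got {emit_interval}"
        )
    access_log = {"Enabled": True, "S3BucketName": bucket, "EmitInterval": emit_interval}
    if prefix:
        # Optional key prefix inside the bucket; omitted when not given.
        access_log["S3BucketPrefix"] = prefix
    return {"AccessLog": access_log}


if __name__ == "__main__":
    # Hypothetical bucket and prefix names.
    print(access_log_attributes("my-elb-logs", prefix="prod", emit_interval=5))
```

Until `Enabled` is set to `True`, nothing is written to the bucket, which is exactly the symptom described in the question.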

NEW QUESTION 24

The health check for one of the instances in your Auto Scaling group returns a status of Impaired to Auto Scaling. What will Auto Scaling do in this case?

- A. Terminate the instance and launch a new instance

- B. Send an SNS notification

- C. Perform a health check until cool down before declaring that the instance has failed

- D. Wait for the instance to become healthy before sending traffic

Answer: A

Explanation:

Auto Scaling periodically performs health checks on the instances in your Auto Scaling group and identifies any instances that are unhealthy. You can configure Auto Scaling to determine the health status of an instance using Amazon EC2 status checks, Elastic Load Balancing health checks, or custom health checks.

By default, Auto Scaling health checks use the results of the EC2 status checks to determine the health status of an instance. Auto Scaling marks an instance as unhealthy if it fails one or more of the status checks, and then terminates the unhealthy instance and launches a new one to replace it.

For more information on monitoring in Auto Scaling, please visit the below URL:

• http://docs.aws.amazon.com/autoscaling/latest/userguide/as-monitoring-features.html
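The decision described above can be modelled in a few lines. This is a simplified sketch, not Auto Scaling's actual implementation: it assumes only the default EC2 status-check health check (no ELB or custom checks, no grace period) and treats an instance as unhealthy when it is not running or its status check is impaired. Instance IDs are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    instance_id: str
    state: str         # EC2 instance state, e.g. "running" or "stopped"
    status_check: str  # EC2 status check result, e.g. "ok" or "impaired"


def is_unhealthy(instance: Instance) -> bool:
    """Simplified version of the default EC2 status-check health check."""
    return instance.state != "running" or instance.status_check == "impaired"


def plan_replacements(instances: List[Instance]) -> List[str]:
    """IDs of instances that would be terminated and replaced with new ones."""
    return [i.instance_id for i in instances if is_unhealthy(i)]


if __name__ == "__main__":
    group = [
        Instance("i-aaa", "running", "ok"),
        Instance("i-bbb", "running", "impaired"),
    ]
    print(plan_replacements(group))  # ['i-bbb']
```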

NEW QUESTION 25

You are hired as the new head of operations for a SaaS company. Your CTO has asked you to make debugging any part of your entire operation simpler and as fast as possible. She complains that she has no idea what is going on in the complex, service-oriented architecture, because the developers just log to disk, and it's very hard to find errors in logs on so many services. How can you best meet this requirement and satisfy your CTO?

- A. Copy all log files into AWS S3 using a cron job on each instance. Use an S3 Notification Configuration on the PutBucket event and publish events to AWS Lambda. Use the Lambda to analyze logs as soon as they come in and flag issues.

- B. Begin using CloudWatch Logs on every service. Stream all Log Groups into S3 objects. Use AWS EMR cluster jobs to perform ad hoc MapReduce analysis and write new queries when needed.

- C. Copy all log files into AWS S3 using a cron job on each instance. Use an S3 Notification Configuration on the PutBucket event and publish events to AWS Kinesis. Use Apache Spark on AWS EMR to perform at-scale stream processing queries on the log chunks and flag issues.

- D. Begin using CloudWatch Logs on every service. Stream all Log Groups into an AWS Elasticsearch Service Domain running Kibana 4 and perform log analysis on a search cluster.

Answer: D

Explanation:

Amazon Elasticsearch Service makes it easy to deploy, operate, and scale Elasticsearch for log analytics, full text search, application monitoring, and more. Amazon Elasticsearch Service is a fully managed service that delivers Elasticsearch's easy-to-use APIs and real-time capabilities along with the availability, scalability, and security required by production workloads. The service offers built-in integrations with Kibana, Logstash, and AWS services including Amazon Kinesis Firehose, AWS Lambda, and Amazon CloudWatch so that you can go from raw data to actionable insights quickly. Streaming every service's CloudWatch Logs into one searchable domain gives the CTO a single place to search for errors across the whole architecture.

For more information on Amazon Elasticsearch Service, please refer to the below link:

• https://aws.amazon.com/elasticsearch-service/
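When a log group is streamed to Elasticsearch, CloudWatch Logs delivers batches through a subscription, typically to a Lambda function that forwards them to the domain. Each batch arrives as base64-encoded, gzip-compressed JSON under `awslogs.data`. The sketch below decodes such a batch and turns each log event into a document; the document field names and the sample log group are hypothetical, and the actual indexing call to the domain is left out.

```python
import base64
import gzip
import json


def decode_subscription_payload(event: dict) -> dict:
    """Unpack the base64-encoded, gzip-compressed JSON that CloudWatch Logs delivers."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def to_search_documents(payload: dict) -> list:
    """Turn each log event into a document for a search index (hypothetical field names)."""
    if payload.get("messageType") != "DATA_MESSAGE":
        return []  # CONTROL_MESSAGE payloads only check that the destination is reachable
    return [
        {
            "id": e["id"],
            "timestamp": e["timestamp"],  # milliseconds since the epoch
            "message": e["message"],
            "log_group": payload["logGroup"],
            "log_stream": payload["logStream"],
        }
        for e in payload["logEvents"]
    ]


if __name__ == "__main__":
    # Build a sample payload the same way CloudWatch Logs encodes it.
    sample = {
        "messageType": "DATA_MESSAGE",
        "logGroup": "/app/orders",  # hypothetical log group
        "logStream": "i-0abc",
        "logEvents": [{"id": "1", "timestamp": 1700000000000, "message": "ERROR timeout"}],
    }
    data = base64.b64encode(gzip.compress(json.dumps(sample).encode())).decode()
    print(to_search_documents(decode_subscription_payload({"awslogs": {"data": data}})))
```

Keeping the log group and stream on every document lets Kibana filter errors by service, which is the debugging view the CTO is asking for.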

NEW QUESTION 26

......

Recommend!! Get the Full DOP-C01 dumps in VCE and PDF From Thedumpscentre.com, Welcome to Download: https://www.thedumpscentre.com/DOP-C01-dumps/ (New 116 Q&As Version)